DeepSeek Primer

Source. Changes will be added over time.

- Introduction

- Architectural Foundations

- Overview

- Mixture of Experts (MoE)

- Multihead Latent Attention (MLA)

- Overview

- Key Features

- Evolution from DeepSeek-V2 to DeepSeek-R1

- MLA in DeepSeek-V2

- Enhancements in DeepSeek-V3

- Further KV Cache Reduction Through Optimized Compression Techniques

- Optimized Compression Formulation

- Inference-Time Expansion

- Query Compression for Activation Memory Savings

- Reduction in Activation Memory

- Enhanced Numerical Stability with FP8 Mixed Precision

- Adaptive Routing for Load Balancing in MLA

- Enhancements in DeepSeek-R1

- Comparative Analysis

- Implementation

- Background: Standard Multi-Head Attention (MHA)

- Low-Rank Key-Value Joint Compression

- Multi-Stage Compression

- Query Compression and Optimization

- Decoupled Rotary Position Embedding (RoPE)

- Attention Computation in MLA

- RL-Optimized MLA

- Computational and Hardware Optimization

- Comparative Efficiency Analysis

- Multi-Token Prediction (MTP)

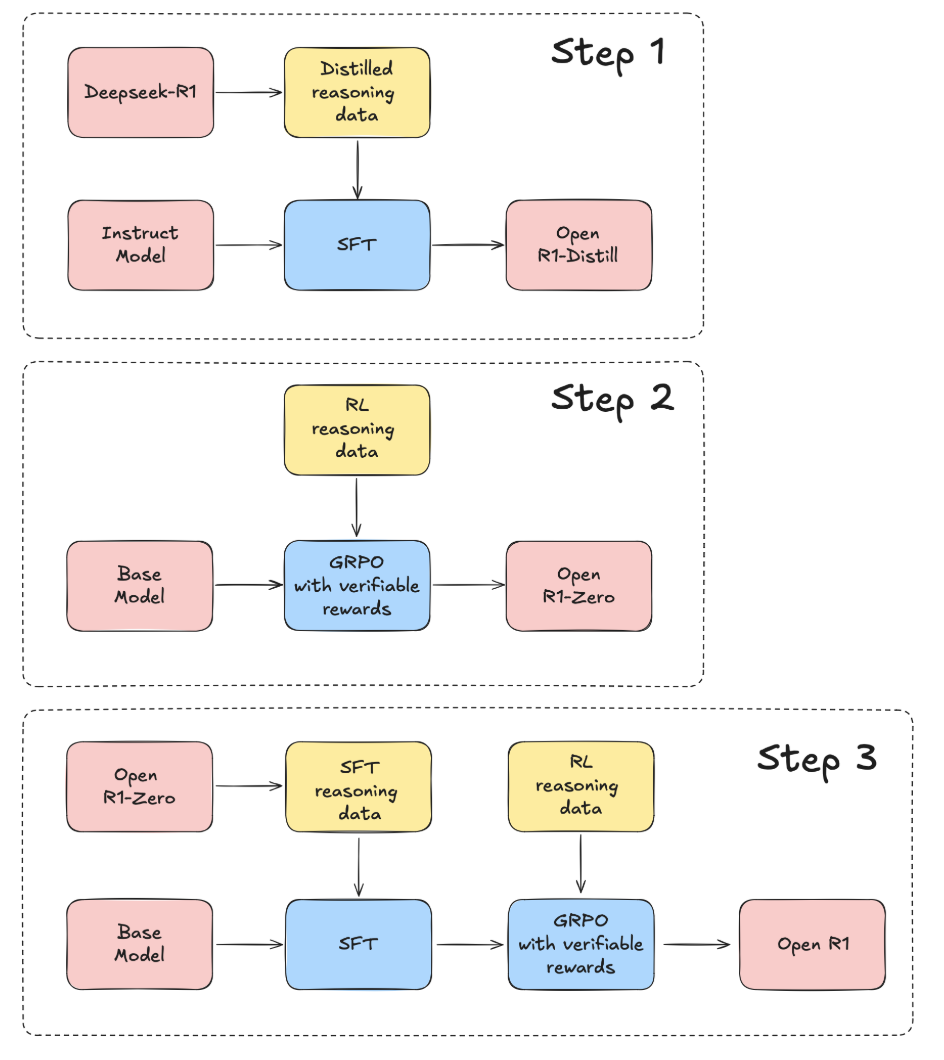

- Training Pipeline: from Pre-Training to Reasoning

- Stage 1: Cold Start with SFT

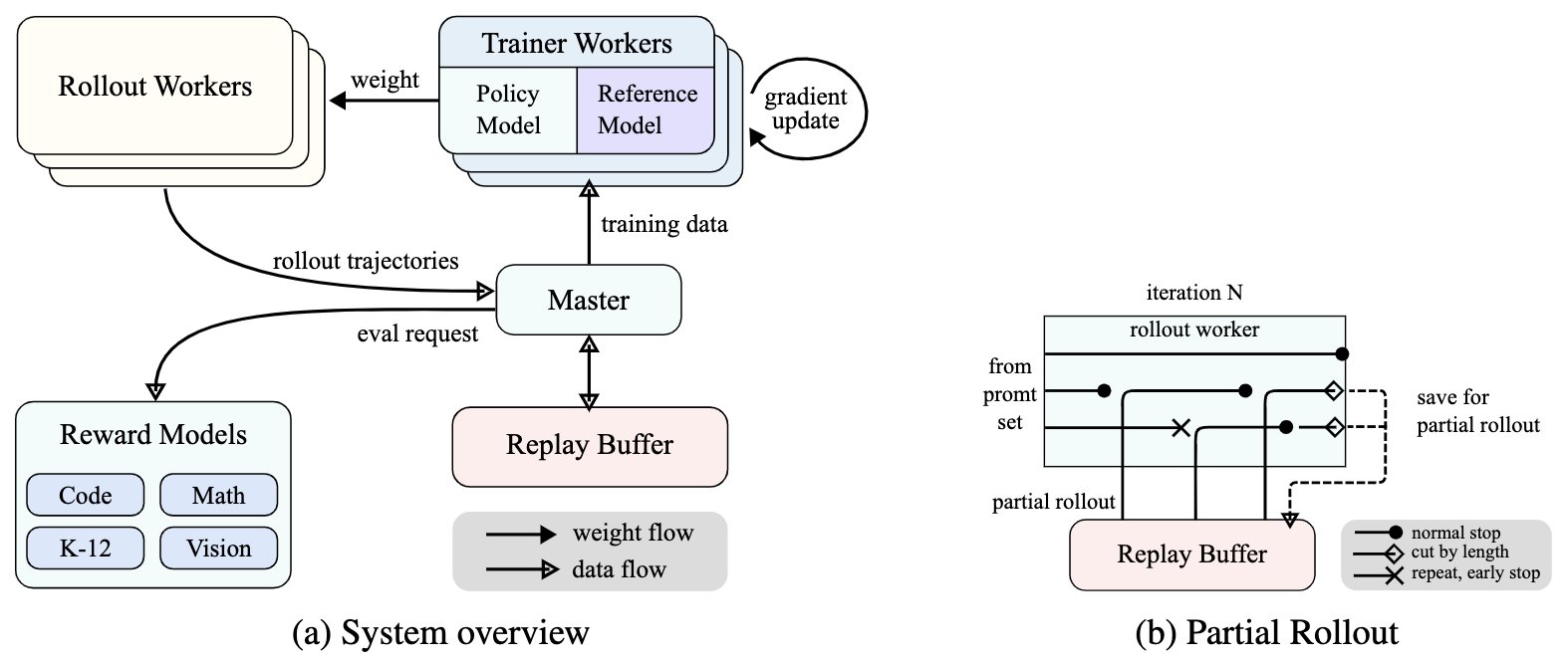

- Stage 2: RL

- DeepSeek’s RL Methodology: a Conceptual Overview

- Policy Optimization: Background

- Group Relative Policy Optimization (GRPO)

- Reward Functions

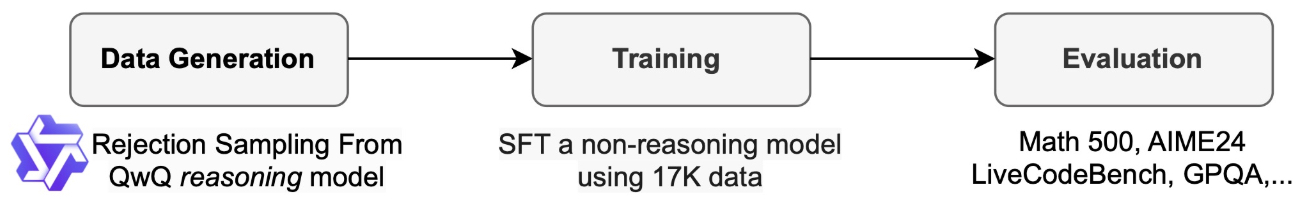

- Stage 3: Rejection Sampling & Expanded Supervised Fine-Tuning

- Stage 4: Secondary RL for Alignment & Generalization

- Comparing Training Pipelines: DeepSeek-R1 vs. DeepSeek-R1-Zero

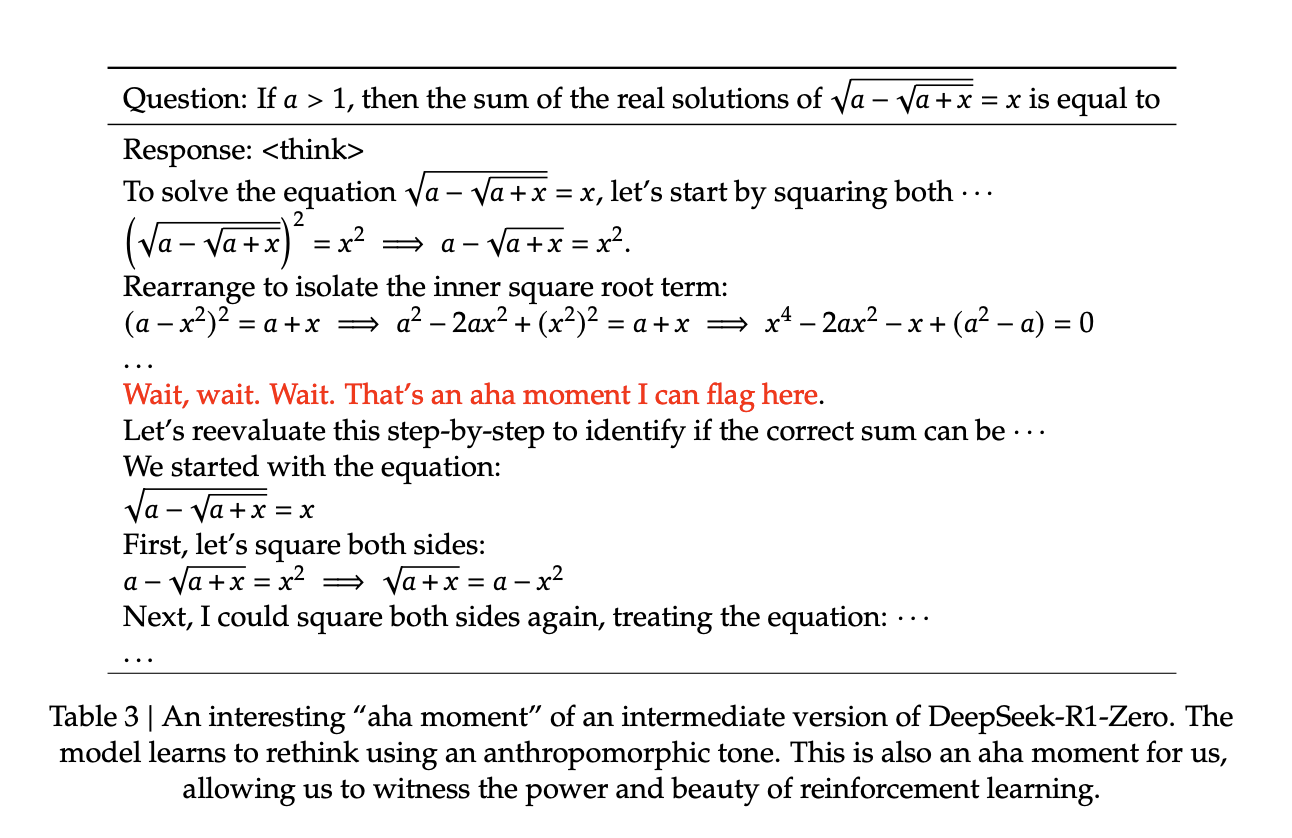

- Emergent Reasoning Behaviors

- Distillation: Reasoning in Compact Models

- Results

- Open Questions

- Other Reasoning Models

- Reasoning Datasets

- References

Introduction

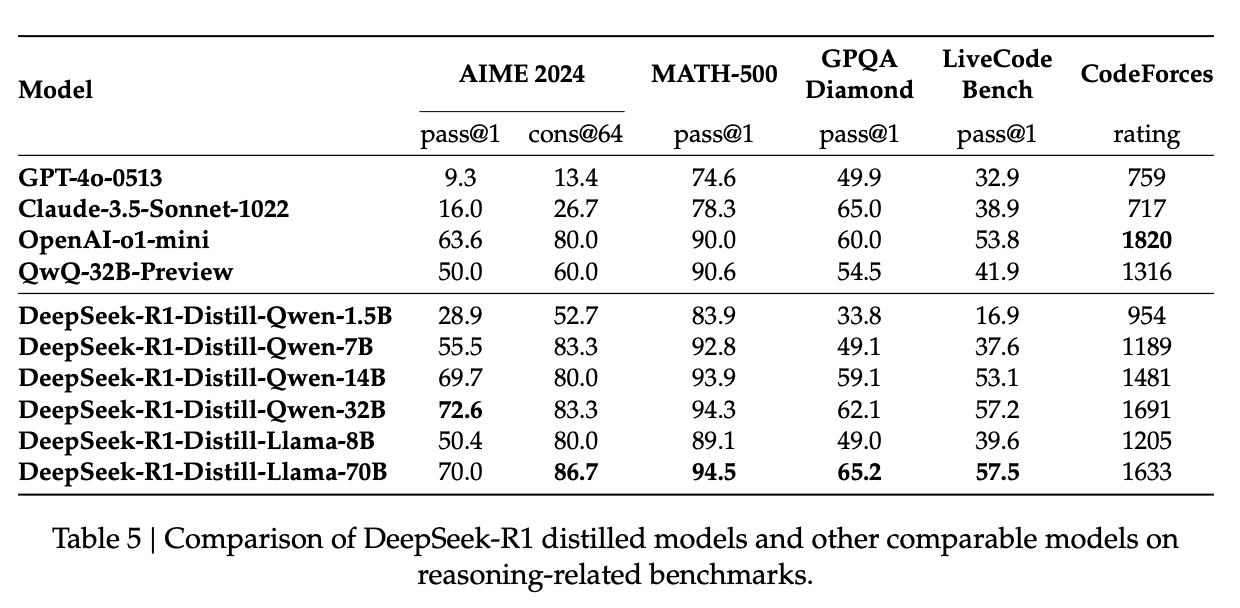

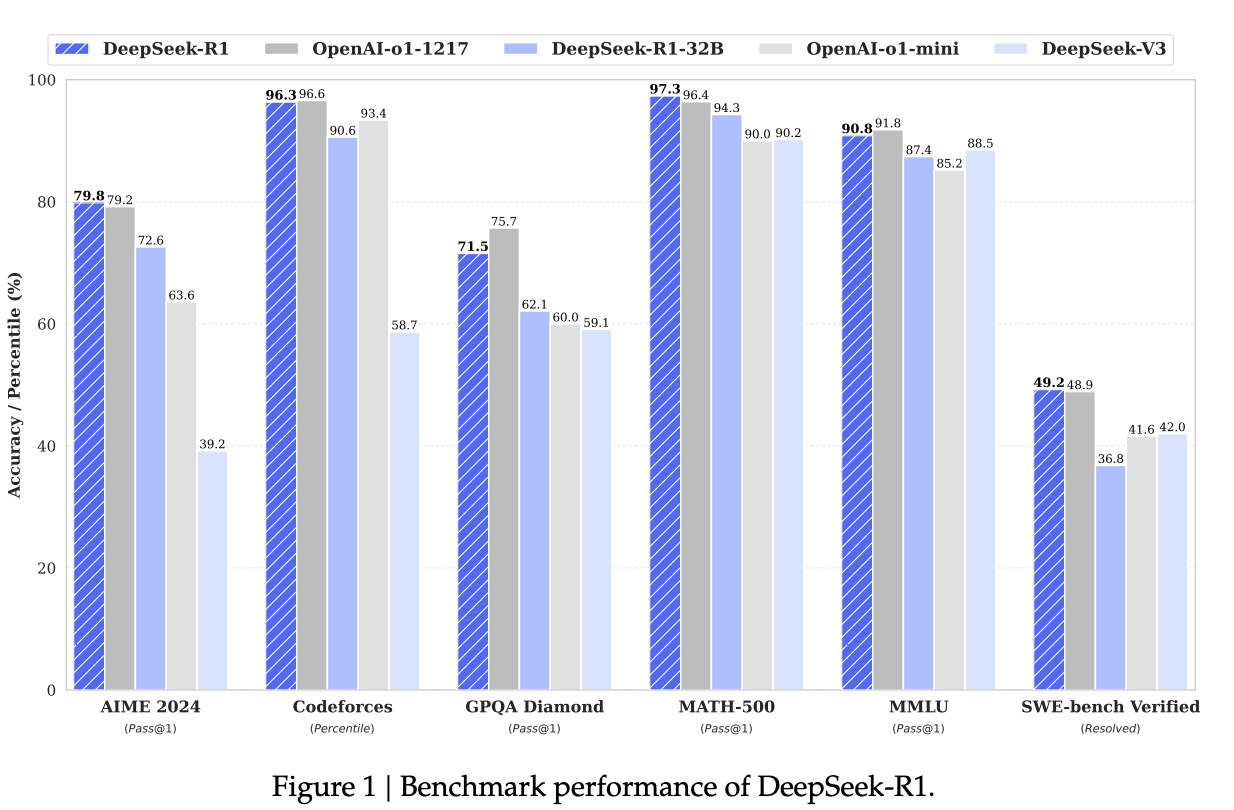

- DeepSeek-R1 and DeepSeek-R1-Zero represent a landmark in reasoning-capable Large Language Models (LLMs). Released under an MIT license, these models rival closed-source giants like OpenAI’s o1 and o3 series while pioneering a reinforcement learning (RL)-driven framework for reasoning tasks.

- Both models leverage Group Relative Policy Optimization (GRPO), introduced in DeepSeekMath, which replaces traditional methods like PPO, making training both efficient and scalable. They also utilize Multihead Latent Attention (MLA), introduced in DeepSeek-V2, which reduces computational and memory inefficiencies particularly for long-context processing by projecting Key-Query-Value (KQV) matrices into a lower-dimensional latent space.

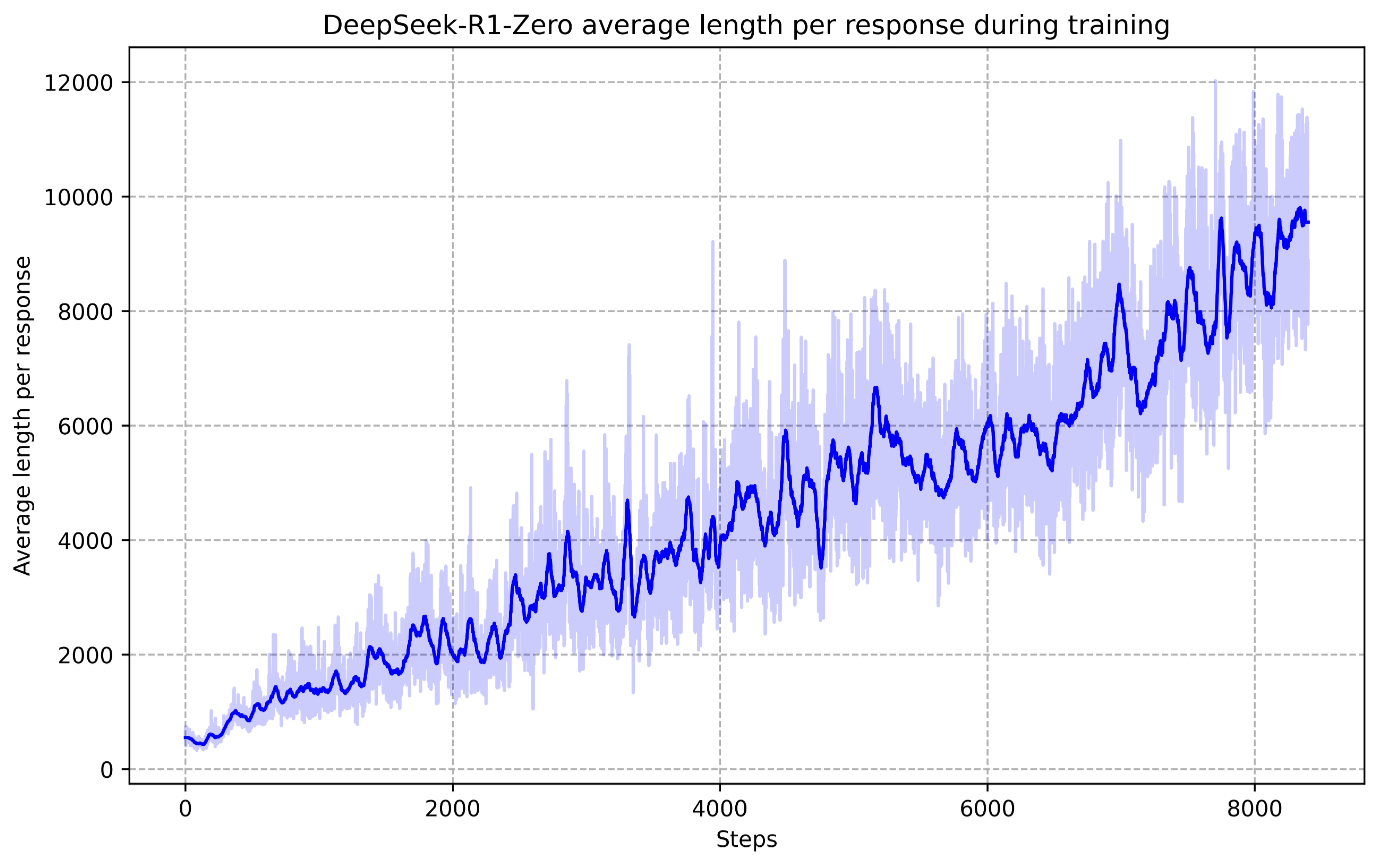

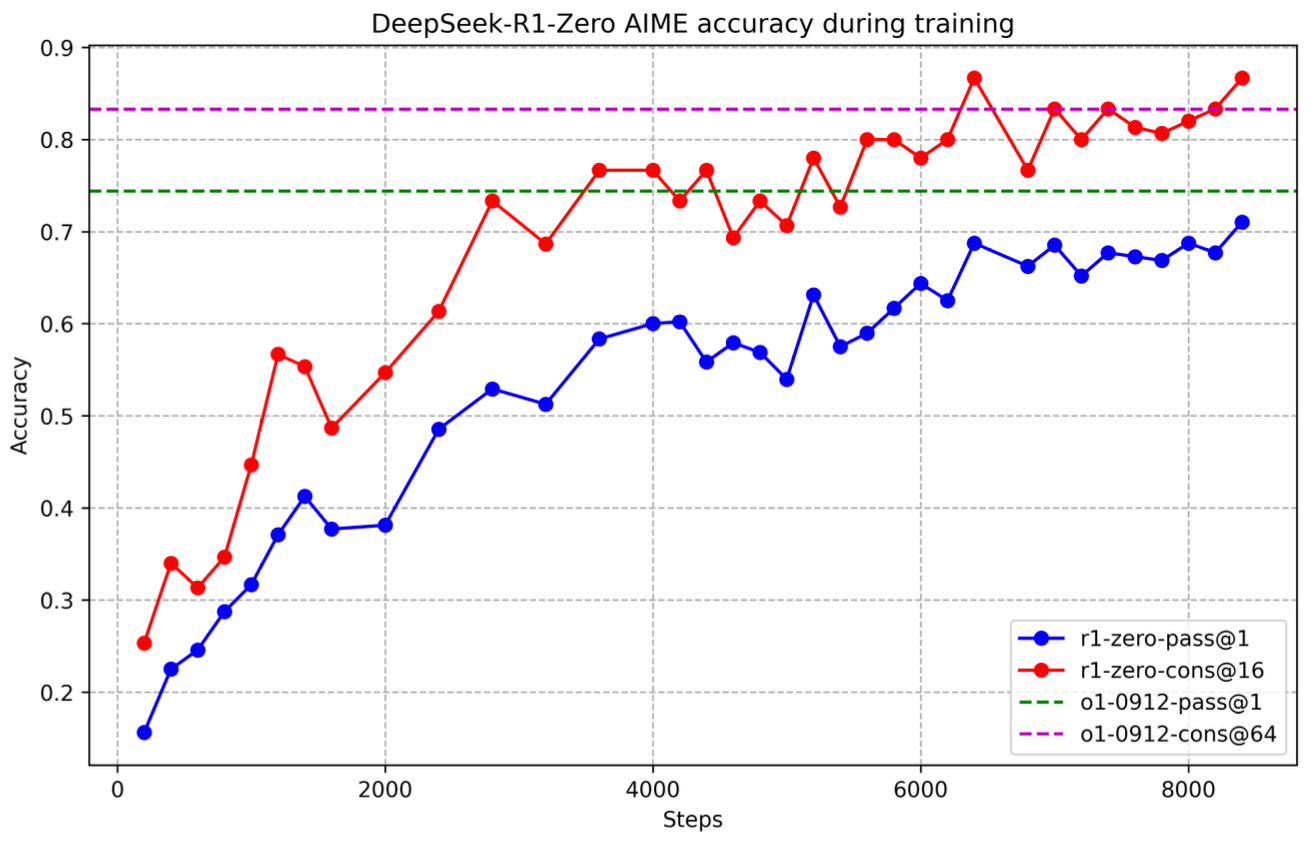

- DeepSeek-R1-Zero demonstrates that reasoning capabilities can emerge purely through RL, without any Supervised Fine-Tuning (SFT). By relying solely on self-evolution through RL, DeepSeek-R1-Zero developed powerful reasoning behaviors but also exhibited challenges such as poor readability and language mixing. DeepSeek-R1 builds on this foundation and addresses these issues by incorporating multi-stage training and a small amount of cold-start data to improve reasoning performance and usability.

- Through innovations like GRPO, FP8 quantization, and emergent Chain-of-Thought (CoT) reasoning, both models rival closed-source models while fostering transparency and accessibility. As the research community builds upon these innovations, DeepSeek-R1 signals a shift towards efficient, reasoning-driven AI accessible to all.

- This primer explores their architecture, multi-stage training pipeline, GRPO mechanics, and emergent reasoning behaviors, alongside how distillation propagates reasoning capabilities to smaller models.

Architectural Foundations

- DeepSeek-R1 builds upon the foundational advancements introduced in DeepSeek-V2 — specifically, Mixture of Experts (MoE) and Multihead Latent Attention (MLA) — and DeepSeek-V3 — specifically, Multi-Token Prediction (MTP) — integrating cutting-edge architectural innovations that optimize both training efficiency and inference performance.

- This section provides a detailed breakdown of the architectural components that evolved from DeepSeek-V2 and DeepSeek-V3 to DeepSeek-R1, highlighting improvements that make DeepSeek-R1 a leading open-source model, capable of rivaling proprietary alternatives in reasoning efficiency and performance.

Overview

- DeepSeek-R1 incorporates several advanced techniques to achieve remarkable efficiency improvements:

- Mixture of Experts (MoE) Architecture: DeepSeek-R1 utilizes a Mixture of Experts model, which decomposes a large model into smaller, specialized sub-models. This architecture allows for the activation of only relevant sub-models during specific tasks, enabling the system to operate efficiently on consumer-grade GPUs.

- Key-Value Memory Compression via Multihead Latent Attention (MLA): By implementing sophisticated compression algorithms, DeepSeek-R1 achieves a 93% reduction in the storage requirements for key-value indices, which are known to consume considerable amounts of VRAM.

- Multi-Token Prediction: DeepSeek-R1 is designed to predict multiple tokens simultaneously rather than one at a time. This strategy effectively doubles the inference speed, enhancing overall performance.

- Low-Precision Computation: DeepSeek-R1 employs mixed-precision arithmetic, performing a significant portion of computations using 8-bit floating-point numbers instead of the standard 32-bit. This approach substantially reduces memory consumption and accelerates processing speeds.

- Collectively, these innovations contribute to DeepSeek-R1’s significant advancements in training efficiency, reportedly achieving a 45-fold improvement over previous models.

Mixture of Experts (MoE)

Overview

- The MoE mechanism selectively activates a subset of the total model parameters at each inference step, achieving computational savings while maintaining model quality. This approach enables scaling up model parameters without a proportional increase in computational cost.

- DeepSeek-R1 refines DeepSeek-V2’s MoE framework, introducing dynamic expert routing, reinforcement learning-based load balancing, and enhanced sparsity constraints. These innovations make DeepSeek-R1 one of the most efficient and scalable open-source MoE models available.

Key Features

- Reinforcement Learning-Based Expert Routing: DeepSeek-R1 replaces static gating functions with a reinforcement learning (RL) policy to dynamically assign tokens to experts. The RL-based router optimizes expert selection by maximizing load balancing while minimizing routing entropy, leading to more efficient token-expert mapping.

- Hierarchical Entropy-Gated MoE (HE-MoE): The expert selection process is refined with a multi-level gating mechanism. Tokens first pass through a global selection phase, followed by cluster-level pruning, and finally, an entropy-aware adjustment ensures balanced expert activation. This approach prevents expert over-specialization and improves generalization.

- Device-Constrained Expert Allocation (DCEA): Experts are assigned based on available compute resources, reducing cross-device communication overhead. The model selects experts within a constrained pool of devices, lowering synchronization costs and increasing training efficiency.

- Load-Balanced Expert Utilization with RL-Based Adjustments: Instead of relying on auxiliary loss functions to balance load, DeepSeek-R1 dynamically adjusts expert activation probabilities using RL-based bias terms. This ensures consistent workload distribution without additional loss penalties, improving stability and convergence.

- Full Token Retention (No Token Dropping): Unlike earlier iterations that dropped low-affinity tokens to balance computational load, DeepSeek-R1 retains all tokens during both training and inference. This ensures that no information is lost, leading to improved model coherence and generalization.

- Cross-Device Communication Optimization: With DCEA and hierarchical expert gating, DeepSeek-R1 significantly reduces inter-device communication, leading to up to a 35% decrease in latency. This optimization enhances efficiency without sacrificing model performance.

- Dynamic Expert Activation: The model adapts expert selection dynamically using learned routing strategies, ensuring efficient allocation of computational resources. This allows DeepSeek-R1 to scale effectively without a linear increase in computational cost.

- Adaptive Expert Specialization: By incorporating entropy-based constraints, DeepSeek-R1 ensures that experts remain specialized but not overly rigid. This dynamic specialization enhances both accuracy and efficiency while maintaining flexibility in expert activation.

Evolution from DeepSeek-V2 to DeepSeek-R1

MoE in DeepSeek-V2

- DeepSeek-V2 introduces a specialized MoE architecture called DeepSeekMoE, which optimizes model training efficiency and inference throughput while maintaining strong performance. This architecture refines expert selection, routing, and load balancing strategies to reduce computational overhead. Below, we detail the MoE-specific mechanisms in DeepSeek-V2, breaking them down into their individual components.

Basic Architecture of DeepSeekMoE

- DeepSeekMoE is designed with fine-grained expert segmentation and shared expert isolation, which increase specialization while reducing redundancy. The MoE architecture in DeepSeek-V2 consists of:

- $N_s$ shared experts, which process all tokens.

- $N_r$ routed experts, which are selectively activated for tokens based on a gating function.

- Each token is processed by a fixed number $K_r$ of routed experts.

- The output of the MoE layer is computed as:

$$h_t' = u_t + \sum_{i=1}^{N_s} \mathrm{FFN}_i^{(s)}(u_t) + \sum_{i=1}^{N_r} g_{i,t}\,\mathrm{FFN}_i^{(r)}(u_t)$$

- where:

- $\mathrm{FFN}_i^{(s)}$ represents a shared expert.

- $\mathrm{FFN}_i^{(r)}$ represents a routed expert.

- $g_{i,t}$ is the gating function, determining expert selection for token $t$.

- The gating function follows:

$$g_{i,t} = \begin{cases} s_{i,t}, & s_{i,t} \in \text{Top-}K_r(\{s_{j,t} \mid 1 \le j \le N_r\}) \\ 0, & \text{otherwise} \end{cases}$$

- where $s_{i,t}$ is the softmax-weighted token-expert affinity:

$$s_{i,t} = \mathrm{Softmax}_i(u_t^T e_i)$$

- where $e_i$ is the centroid of expert $i$.
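The layer output and gating rule above can be sketched in a few lines of plain Python. This is a minimal illustration, not DeepSeek's implementation: the function names, the toy "experts" (simple scalings standing in for real FFNs), and the vector sizes are all assumptions made for the example.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def moe_layer(u, shared_experts, routed_experts, centroids, k_r):
    """Residual + every shared expert + the top-k_r routed experts weighted by their gates."""
    scores = softmax([dot(u, e) for e in centroids])             # s_{i,t}
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k_r]
    gates = [scores[i] if i in top else 0.0 for i in range(len(scores))]  # g_{i,t}
    out = list(u)                                                # residual term u_t
    for ffn in shared_experts:
        out = [o + y for o, y in zip(out, ffn(u))]
    for g, ffn in zip(gates, routed_experts):
        if g > 0.0:                                              # unselected experts are never run
            out = [o + g * y for o, y in zip(out, ffn(u))]
    return out, gates

# Hypothetical stand-in for an FFN: multiply the input by a constant.
def scale(c):
    return lambda x: [c * v for v in x]

u_t = [1.0, 0.0]
h_t, g_t = moe_layer(
    u_t,
    shared_experts=[scale(1.0)],
    routed_experts=[scale(1.0), scale(2.0), scale(3.0), scale(4.0)],
    centroids=[[2.0, 0.0], [1.0, 0.0], [0.0, 0.0], [-1.0, 0.0]],
    k_r=2,
)
```

With `k_r=2`, only the two experts whose centroids align best with `u_t` receive non-zero gates, so the remaining two routed experts contribute no computation for this token.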

Device-Limited Routing

- One of the major computational bottlenecks in MoE models is the communication overhead introduced by expert parallelism. To address this, DeepSeekMoE implements device-limited routing, restricting the number of devices a token’s experts can be distributed across.

- Key implementation details:

- Each token first selects $M$ devices with the highest affinity scores.

- The final $K_r$ experts are chosen only from these selected devices.

- In practice, setting $M \ge 3$ ensures performance close to unrestricted routing while significantly reducing inter-device communication.
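The two-step selection can be sketched as follows. This is a toy example under stated assumptions: the helper name `device_limited_topk`, the expert-to-device layout, and the choice to rank a device by the best affinity among its experts are illustrative, not taken from DeepSeek's code.

```python
def device_limited_topk(scores, expert_device, m_devices, k_r):
    """Pick k_r experts, restricted to the m_devices devices with the best expert affinity."""
    # Rank each device by the highest affinity among the experts it hosts.
    best = {}
    for i, s in enumerate(scores):
        d = expert_device[i]
        best[d] = max(best.get(d, float("-inf")), s)
    allowed = set(sorted(best, key=best.get, reverse=True)[:m_devices])
    # Top-k_r selection among experts that live on the allowed devices only.
    candidates = [i for i in range(len(scores)) if expert_device[i] in allowed]
    return sorted(candidates, key=lambda i: scores[i], reverse=True)[:k_r]

scores = [0.30, 0.05, 0.25, 0.02, 0.20, 0.18]   # affinities s_{i,t} for six experts
expert_device = [0, 0, 1, 1, 2, 2]               # two experts per device (assumed layout)
chosen = device_limited_topk(scores, expert_device, m_devices=2, k_r=3)
```

Unrestricted top-3 routing would pick experts 0, 2, and 4 and touch three devices. With `m_devices=2` the token stays on devices 0 and 1 and falls back to expert 1 instead of expert 4, trading a little affinity for less communication.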

Auxiliary Loss for Load Balancing

- DeepSeek-V2 employs multiple auxiliary losses to ensure balanced expert utilization, avoiding situations where certain experts become overloaded while others remain underutilized. Specifics below:

- Expert-Level Balance Loss:

- To prevent routing collapse, where only a subset of experts get trained, DeepSeek-V2 minimizes:

$$\mathcal{L}_{\text{ExpBal}} = \alpha_1 \sum_{i=1}^{N_r} f_i P_i$$

- where:

- $f_i$ is the fraction of tokens routed to expert $i$,

- $P_i$ is the average probability of selecting expert $i$,

- $\alpha_1$ is a hyperparameter controlling the strength of the loss.

- Device-Level Balance Loss:

- To distribute computation evenly across devices, DeepSeekMoE assigns experts to $D$ device groups, where each group runs on a separate device. The balance loss is:

$$\mathcal{L}_{\text{DevBal}} = \alpha_2 \sum_{i=1}^{D} f_i' P_i'$$

- where $f_i'$ and $P_i'$ aggregate usage statistics across all experts on device $i$.

- Communication Balance Loss:

- This loss ensures that each device receives an approximately equal number of tokens, preventing bottlenecks caused by excessive communication loads:

$$\mathcal{L}_{\text{CommBal}} = \alpha_3 \sum_{i=1}^{D} f_i'' P_i''$$

- where $f_i''$ and $P_i''$ measure the fraction of tokens sent to device $i$.
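As a rough illustration of the expert-level term, the sketch below computes $\alpha_1 \sum_i f_i P_i$ for a small batch. The function name and the exact normalisation of $f_i$ (share of routing slots) are assumptions for the example; the device-level and communication terms would follow the same pattern with per-device aggregates.

```python
def expert_balance_loss(assignments, probs, n_experts, alpha):
    """alpha * sum_i f_i * P_i for one batch.

    assignments: per-token list of selected expert indices.
    probs: per-token list of affinity scores over all experts.
    """
    n_tokens = len(assignments)
    total_slots = sum(len(a) for a in assignments)
    loss = 0.0
    for i in range(n_experts):
        # f_i: share of routing slots that went to expert i (assumed normalisation).
        f_i = sum(a.count(i) for a in assignments) / total_slots
        # P_i: mean affinity given to expert i across tokens.
        p_i = sum(p[i] for p in probs) / n_tokens
        loss += f_i * p_i
    return alpha * loss

balanced = expert_balance_loss([[0], [1], [2], [3]], [[0.25] * 4] * 4, 4, alpha=1.0)
collapsed = expert_balance_loss([[0]] * 4, [[0.97, 0.01, 0.01, 0.01]] * 4, 4, alpha=1.0)
```

In this toy batch the loss is lowest when both the routing counts and the probabilities are spread evenly, and it grows when a single expert absorbs all tokens, which is the collapse the penalty is meant to discourage.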

Token-Dropping Strategy

- While auxiliary losses improve balance, they cannot strictly guarantee uniform expert utilization. To further mitigate inefficiencies, DeepSeek-V2 implements a token-dropping strategy at the device level:

- The computational budget per device is first estimated.

- Tokens with the lowest affinity scores are dropped until the budget is met.

- At least 10% of training sequences are exempted from token dropping to ensure diversity.

- This approach allows flexibility in dynamically adjusting token retention during inference based on computational constraints.

Enhancements in DeepSeek-V3

- DeepSeek-V3 introduces several significant improvements to the MoE framework compared to DeepSeek-V2. These enhancements primarily focus on increasing model efficiency, reducing training and inference costs, and maintaining high performance. The key improvements include an auxiliary-loss-free load balancing strategy, node-limited routing, improved expert selection mechanisms, and enhanced sparsity constraints. These advancements contribute to more efficient training, faster inference, and superior performance compared to DeepSeek-V2.

Auxiliary-Loss-Free Load Balancing

- In contrast to DeepSeek-V2, which relies on auxiliary losses to ensure balanced expert utilization, DeepSeek-V3 introduces an auxiliary-loss-free strategy. Instead of penalizing imbalance with additional loss terms, DeepSeek-V3 dynamically adjusts expert selection using bias terms. The expert gating function is modified as follows:

$$g_{i,t}' = \begin{cases} s_{i,t}, & s_{i,t} + b_i \in \text{Top-}K_r(\{s_{j,t} + b_j \mid 1 \le j \le N_r\}) \\ 0, & \text{otherwise} \end{cases}$$

- where $b_i$ is a bias term adjusted dynamically based on the load of expert $i$ over multiple training steps:

$$b_i \leftarrow b_i - \gamma \ \text{if expert } i \text{ is overloaded, otherwise } b_i \leftarrow b_i + \gamma.$$

- This dynamic adjustment ensures that expert load remains balanced without requiring auxiliary loss penalties, leading to better training stability and efficiency.
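A minimal sketch of this bias mechanism is shown below. Two details are assumptions made for the example: an expert counts as overloaded when its load exceeds the mean load, and the load vector is held fixed across iterations to keep the demonstration short. The function names are hypothetical.

```python
def update_biases(biases, loads, gamma):
    """Lower the bias of overloaded experts and raise it for the others."""
    mean_load = sum(loads) / len(loads)   # "overloaded" = above the mean (assumed criterion)
    return [b - gamma if load > mean_load else b + gamma
            for b, load in zip(biases, loads)]

def select_experts(scores, biases, k_r):
    """Rank by s + b, but return the unbiased score s as the gate value."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + biases[i], reverse=True)
    return {i: scores[i] for i in ranked[:k_r]}

scores = [0.50, 0.45, 0.40]
biases = [0.0, 0.0, 0.0]
before = select_experts(scores, biases, k_r=1)
for _ in range(10):                       # expert 0 keeps receiving most of the traffic
    biases = update_biases(biases, loads=[8, 1, 1], gamma=0.01)
after = select_experts(scores, biases, k_r=1)
```

The bias only changes which experts are selected. The gate value stays the raw affinity $s_{i,t}$, matching the case definition above, so balancing does not distort the weighting of the experts that are chosen.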

Node-Limited Routing (NLR)

- DeepSeek-V3 introduces Node-Limited Routing (NLR) to further optimize communication overhead in large-scale MoE training. Instead of allowing tokens to be dispatched to any expert across the model, NLR restricts the number of nodes each token can communicate with. The routing mechanism selects at most M nodes per token, ensuring that experts are assigned in a way that minimizes inter-node synchronization.

$$M = \sum_{i=1}^{N} \max\{ s_{j,t} \mid j \in \text{node } i \}$$

- This approach significantly reduces cross-node communication overhead, leading to faster training and inference times.

Improved Expert Selection Mechanism

- DeepSeek-V3 refines expert selection by incorporating a sigmoid-based token-expert affinity function instead of the softmax-based mechanism used in DeepSeek-V2. The new function is defined as:

$$s_{i,t} = \sigma(u_t^T e_i)$$

- where $e_i$ is the centroid of expert $i$ and $\sigma(\cdot)$ is the sigmoid activation function. The selection process then normalizes the top-$K_r$ expert scores:

$$g_{i,t} = \frac{g_{i,t}'}{\sum_{j \in \text{Top-}K_r} g_{j,t}'}.$$

- This modification prevents extreme expert selection probabilities, leading to better load balancing and specialization.
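The following sketch applies the sigmoid affinity and the top-$K_r$ normalisation to a handful of logits. It is illustrative only: the helper names and the example logits are assumptions, and the bias terms from the previous subsection are left out for brevity.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_gates(logits, k_r):
    """Sigmoid affinities, top-k_r selection, then renormalisation over the selected set."""
    s = [sigmoid(z) for z in logits]                       # s_{i,t}, each in (0, 1)
    top = sorted(range(len(s)), key=lambda i: s[i], reverse=True)[:k_r]
    denom = sum(s[i] for i in top)
    return [s[i] / denom if i in top else 0.0 for i in range(len(s))]

gates = sigmoid_gates([4.0, 3.0, -1.0, -2.0], k_r=2)
```

For these logits the two selected gates come out close to an even split, whereas a softmax over the same two logits would weight the first expert much more heavily. That is one way to read the claim that sigmoid scoring avoids extreme selection probabilities.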

Enhanced Sparsity Constraints with Hierarchical Gating

- To avoid over-specialization and encourage generalization, DeepSeek-V3 introduces hierarchical gating. Unlike traditional top-K gating, this method applies sparsity constraints at multiple levels:

- Global Selection: Initial selection of $N_g$ experts at a coarse level.

- Cluster-Level Pruning: Further filtering experts within selected clusters to obtain $K_r$ experts.

- Entropy-Based Adjustments: Adjusting expert activation probabilities based on entropy constraints to avoid extreme sparsity.

- Mathematically, the entropy-based adjustment modifies gating scores as follows:

$$g_{i,t} \leftarrow g_{i,t} \times \left(1 - \lambda \cdot H(g_{1:N_r,t})\right)$$

- where $H(\cdot)$ is the entropy function and $\lambda$ is a regularization coefficient controlling the trade-off between uniform selection and specialization.
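To make the adjustment concrete, the sketch below evaluates the scaling factor $1 - \lambda H$ for a fully peaked and a uniform gate vector. It assumes Shannon entropy in nats and a gate vector that sums to one; neither detail is stated in the text, and the function names are hypothetical.

```python
import math

def entropy(p):
    """Shannon entropy in nats, skipping zero entries."""
    return -sum(x * math.log(x) for x in p if x > 0.0)

def entropy_adjust(gates, lam):
    """Scale every gate of a token by (1 - lam * H(gates))."""
    factor = 1.0 - lam * entropy(gates)
    return [g * factor for g in gates]

peaked = entropy_adjust([1.0, 0.0, 0.0, 0.0], lam=0.1)
uniform = entropy_adjust([0.25, 0.25, 0.25, 0.25], lam=0.1)
```

Under this reading the factor depends only on the entropy of the whole gate vector, so every gate of a token shrinks by the same ratio: a one-hot vector is left unchanged, while a uniform one is scaled down by $1 - \lambda \ln 4$.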

No Token-Dropping Strategy

- DeepSeek-V2 implemented a token-dropping strategy to balance computation per device. However, DeepSeek-V3’s enhanced load-balancing mechanism eliminates the need for token dropping, ensuring 100% token retention during both training and inference. This improves generalization and avoids loss of information during model updates.

Enhancements in DeepSeek-R1

- DeepSeek-R1 introduces several major enhancements to the MoE framework that improve computational efficiency, load balancing, and inference accuracy. These enhancements build upon DeepSeek-V3’s optimizations, integrating reinforcement learning-based routing strategies, entropy-controlled gating, and fine-grained expert specialization. Below, we break down the key MoE innovations in DeepSeek-R1.

Adaptive Expert Routing with Reinforcement Learning (RL)

- DeepSeek-R1 introduces RL-based expert routing, moving away from the static routing approaches used in DeepSeek-V3. Instead of selecting experts based purely on fixed token-expert affinity scores, DeepSeek-R1 incorporates a learned RL policy to dynamically assign tokens to experts.

- Mathematical Formulation:

- The expert selection function is formulated as an RL policy optimization problem, where the probability of selecting expert $e_i$ for token $t$ is adjusted dynamically based on token embeddings $u_t$:

$$g_{i,t} = \pi_\theta(e_i \mid u_t)$$

- where $\pi_\theta$ is the policy network that selects experts based on contextual embeddings. The optimization objective follows GRPO:

$$J_{GRPO}(\theta) = \mathbb{E}_{q \sim P(Q),\ \{o_i\}_{i=1}^{G} \sim \pi_{\theta_{old}}} \left[ \frac{1}{G} \sum_{i=1}^{G} \min\left( \frac{\pi_\theta(o_i \mid q)}{\pi_{\theta_{old}}(o_i \mid q)} A_i,\ \operatorname{clip}(\cdot) \right) - \beta D_{KL}(\pi_\theta \,\|\, \pi_{ref}) \right]$$

- where $D_{KL}$ regularizes the policy update to prevent drastic shifts.

- Implementation Details:

- The RL-based router learns optimal token assignments by maximizing expert load balancing and minimizing routing entropy.

- It penalizes overloading of specific experts while incentivizing uniform activation across layers.

- Dynamic bias terms are introduced into the routing function to further modulate expert selection in response to training feedback.

- This approach enables adaptive token-expert mapping, optimizing inference speed while maintaining accuracy.

Hierarchical Entropy-Gated MoE (HE-MoE)

- DeepSeek-R1 enhances top-K MoE routing by introducing Hierarchical Entropy-Gated MoE (HE-MoE). Instead of applying a single top-K gating function at the token level, DeepSeek-R1 implements a multi-level gating mechanism:

- Global Selection: Tokens are first routed to an initial pool of $N_g$ experts using softmax affinity scoring.

- Cluster-Level Pruning: Within the selected pool, a secondary gating mechanism prunes experts based on entropy constraints.

- Final Expert Assignment: Top-$K_r$ experts are chosen using an adjusted probability function that incorporates an entropy-aware penalty.

- The final gating function is modified as:

$$g_{i,t} = \frac{\mathrm{Softmax}_i(u_t^T e_i)}{1 + \lambda H(g_{1:N_r,t})}$$

- where $H(\cdot)$ is the entropy function, and $\lambda$ controls the regularization strength.

- Key Benefits:

- Prevents expert over-specialization by ensuring that tokens are distributed more evenly.

- Reduces mode collapse where certain experts dominate training.

- Dynamically scales sparsity by adjusting gating thresholds based on task complexity.

Device-Constrained Expert Allocation (DCEA)

- DeepSeek-R1 improves upon DeepSeek-V3’s node-limited routing by incorporating Device-Constrained Expert Allocation (DCEA), which restricts expert assignments based on GPU/TPU availability and interconnect bandwidth.

- Algorithm:

- Each token first selects a subset of devices with the highest affinity scores.

- Experts are restricted to these devices, reducing inter-device synchronization overhead.

- The final experts are selected only within the constrained device pool, minimizing cross-node communication.

$$M = \sum_{i=1}^{N} \max\{ s_{j,t} \mid j \in \text{device } i \}$$

- Results:

- 35% reduction in cross-device communication latency.

- More stable training dynamics, as experts remain on localized compute nodes.

- Lower bandwidth consumption, improving training efficiency.

Load-Balanced Expert Utilization with RL-Based Adjustments

- To ensure uniform load balancing, DeepSeek-R1 relies on adaptive load-based routing adjustments rather than an auxiliary-loss-based balancing strategy such as the one used in DeepSeek-V2.

- Instead of explicitly minimizing an expert balance loss term, DeepSeek-R1 dynamically adjusts gating probabilities using an RL-based expert selection bias:

$$b_i \leftarrow b_i - \gamma \ \text{if expert } i \text{ is overloaded, otherwise } b_i \leftarrow b_i + \gamma.$$

- Advantages Over Auxiliary Losses:

- Faster convergence, as it avoids additional gradient updates for balance constraints.

- More robust expert selection, as it adapts over multiple training steps.

- This ensures consistent workload distribution without requiring hard auxiliary penalties.

Elimination of Token-Dropping Strategy

- Unlike DeepSeek-V2, which used token dropping to balance computation per device, DeepSeek-R1 (like DeepSeek-V3) eliminates token dropping entirely by optimizing expert activation thresholds dynamically.

- Instead of removing low-affinity tokens, DeepSeek-R1 reallocates tokens to alternative experts using a reinforcement-learning-based expert reassignment strategy.

- Benefits:

- 100% token retention during training and inference.

- Stronger generalization since all tokens contribute to learning.

- No loss of contextual information, leading to more coherent completions.

Comparative Analysis

- DeepSeek-R1 represents the most advanced iteration of the MoE framework, building upon the optimizations introduced in DeepSeek-V2 and DeepSeek-V3. Below, we compare key MoE features across these three versions, highlighting improvements in efficiency, expert routing, load balancing, and inference performance.

| Feature | DeepSeek-V2 | DeepSeek-V3 | DeepSeek-R1 |
|---|---|---|---|
| Dynamic Expert Activation | ❌ | ✅ (Bias-based selection) | ✅ (RL-based selection) |
| Device-Limited Routing (DLR) | ✅ | ✅ (Node-Limited Routing) | ✅ (Device-Constrained Expert Allocation) |
| Auxiliary Loss for Load Balancing | ✅ | ❌ (Bias-based adjustments) | ❌ (RL-based adaptive balancing) |
| RL-Based Routing | ❌ | ❌ | ✅ |
| Hierarchical Gating for Expert Selection | ❌ | ✅ | ✅ (Entropy-aware adjustment) |
| Improved Expert Selection Mechanism | ❌ | ✅ (Sigmoid-based) | ✅ (RL-optimized selection) |
| Cross-Device Communication Reduction | ✅ (Device-limited routing) | ✅ (Node-limited routing) | ✅ (35% lower latency with DCEA) |
| Token Dropping for Computational Efficiency | ✅ | ❌ (No token dropping) | ❌ (No token dropping) |
| Sparse Activation Strategy | ✅ (Top-K gating) | ✅ (Hierarchical Top-K gating) | ✅ (Hierarchical Entropy-Gated MoE) |
| Training Stability | Moderate | High | Very High |
| Inference Speed Optimization | Moderate | High | Very High |
| Load Balancing Strategy | Loss-based balancing | Bias-based adaptive balancing | RL-based adaptive balancing |

Mathematical Formulation

- The expert selection process in DeepSeek-R1 follows a gating function:

$$G(x) = \mathrm{softmax}(W_g x)$$

- where $W_g$ is a trainable weight matrix.

- The final output is computed as:

$$y = \sum_{k \in K} G_k(x) E_k(x)$$

- where:

- $K$ represents the top-K selected experts.

- $E_k(x)$ is the computation performed by expert $k$.

- $G_k(x)$ is the gating probability.

Load Balancing Loss

- To ensure equal utilization of experts, DeepSeek-R1 applies a load balancing loss:

$$\mathcal{L}_{\text{balance}} = \lambda \sum_{k} \left( \frac{n_k}{N} - \frac{1}{K} \right)^2$$

- where:

- $n_k$ is the number of tokens assigned to expert $k$.

- $N$ is the total number of tokens in a batch.

- $K$ is the number of active experts per token.

- Additionally, an entropy regularization term prevents expert over-reliance:

$$\mathcal{L}_{\text{entropy}} = -\gamma \sum_{k} G_k(x) \log G_k(x)$$

- where $\gamma$ controls entropy strength.
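A small numeric sketch of the two terms, using the symbols as defined above. The function names and the two-expert batch are assumptions made for illustration, and the natural logarithm is assumed for the entropy term.

```python
import math

def balance_loss(token_counts, n_tokens, k, lam):
    """lam * sum_k (n_k / N - 1 / K)^2."""
    return lam * sum((n / n_tokens - 1.0 / k) ** 2 for n in token_counts)

def entropy_term(gate_probs, gamma):
    """-gamma * sum_k G_k log G_k, i.e. gamma times the gate entropy."""
    return -gamma * sum(g * math.log(g) for g in gate_probs if g > 0.0)

even = balance_loss([50, 50], n_tokens=100, k=2, lam=1.0)
skewed = balance_loss([90, 10], n_tokens=100, k=2, lam=1.0)
h = entropy_term([0.5, 0.5], gamma=1.0)
```

In this toy setting an even 50/50 split gives zero balance loss, while a 90/10 split gives $0.4^2 + 0.4^2 = 0.32$. The entropy term equals $\gamma$ times the gate entropy, which is $\ln 2$ for a uniform gate over two experts.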

Multihead Latent Attention (MLA)

Overview

- Multihead Latent Attention (MLA) enhances efficiency by projecting Key-Query-Value (KQV) matrices into a lower-dimensional latent space, significantly reducing computational and memory costs.

- Low-rank compression techniques in MLA minimize the storage overhead of the Key-Value (KV) cache, ensuring faster inference and supporting longer context lengths or larger batch sizes.

- DeepSeek-R1 refines MLA further by incorporating RL-enhanced reasoning optimizations while maintaining low memory overhead.

- By utilizing decoupled rotary positional embeddings and latent-space compression, MLA ensures minimal accuracy degradation while maintaining computational efficiency.

Key Features

- Low-Rank Key-Value Compression: MLA employs a low-rank latent space projection to compress KV pairs, significantly reducing memory overhead. This allows DeepSeek-R1 to store only compressed representations instead of full-dimensional KV states, enabling efficient long-context processing.

- Decoupled Rotary Position Embedding (RoPE): Standard RoPE introduces position-dependent transformations that hinder KV compression. DeepSeek-R1 decouples RoPE from key-value storage, ensuring positional encodings remain effective without interfering with latent-space efficiency.

- Efficient Multihead Attention with Compressed Storage: Instead of caching full key-value matrices for all tokens, MLA only stores their compact latent-space equivalents. This drastically reduces inference memory requirements while maintaining attention fidelity.

- Adaptive Projection Matrices: MLA leverages separate, learned projection matrices for queries, keys, and values. These matrices dynamically adjust during training, ensuring optimal storage efficiency and minimal accuracy loss compared to full-dimensional attention.

- Inference-Efficient Cache Mechanism: By selectively caching only compressed key-value representations, MLA achieves a 93.3% KV cache reduction over traditional Multi-Head Attention (MHA). This allows DeepSeek-R1 to support longer context lengths while minimizing inference latency.

- Enhanced Performance on Long-Context Tasks: DeepSeek-R1 refines MLA with RL-driven optimizations, such as GRPO, to prioritize critical tokens. This improves reasoning accuracy in long-context tasks while preserving computational efficiency.

Evolution from DeepSeek-V2 to DeepSeek-R1

MLA in DeepSeek-V2

- MLA in DeepSeek-V2 was designed to enhance inference efficiency by significantly reducing the KV cache size while maintaining strong model performance. It introduced several key innovations over traditional Multi-Head Attention (MHA), including low-rank key-value joint compression and decoupled rotary position embedding.

- The MLA implementation in DeepSeek-V2 laid the foundation for further improvements in DeepSeek-R1, where it was further refined with FP8 quantization, enhanced compression techniques, and improved numerical stability.

Low-Rank Key-Value Joint Compression

- One of the primary bottlenecks in transformer inference is the large KV cache required to store past keys and values. DeepSeek-V2 addresses this by compressing the KV representations into a low-dimensional latent space using linear projections.

- Given an input token representation $h_t \in \mathbb{R}^d$, standard multi-head attention computes queries, keys, and values as:

$$q_t = W^Q h_t, \quad k_t = W^K h_t, \quad v_t = W^V h_t$$

where $W^Q, W^K, W^V \in \mathbb{R}^{d_h n_h \times d}$.

- Instead of storing full-dimension $k_t$ and $v_t$, MLA compresses them into a latent representation $c_t^{KV}$:

$$c_t^{KV} = W^{DKV} h_t$$

where $W^{DKV} \in \mathbb{R}^{d_c \times d}$ is a down-projection matrix, and $d_c \ll d_h n_h$.

- During inference, the compressed key-value representation is expanded back into usable keys and values:

$$k_t^C = W^{UK} c_t^{KV}, \quad v_t^C = W^{UV} c_t^{KV}$$

where $W^{UK}, W^{UV} \in \mathbb{R}^{d_h n_h \times d_c}$ are up-projection matrices.

This compression reduces the KV cache size from $O(n_h d_h l)$ to $O(d_c l)$, where $l$ is the number of layers.
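The down-projection and up-projection steps can be sketched with tiny random matrices. This is a toy sketch with assumed sizes and helper names (`matvec`, `rand_matrix`). It shows only what is cached and what is rebuilt for a single layer, not a trained model.

```python
import random

def matvec(w, x):
    return [sum(a * b for a, b in zip(row, x)) for row in w]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
d, n_h, d_h, d_c = 16, 4, 4, 2            # toy sizes with d_c << n_h * d_h

w_dkv = rand_matrix(d_c, d, rng)          # down-projection W^{DKV}
w_uk = rand_matrix(n_h * d_h, d_c, rng)   # up-projection W^{UK}
w_uv = rand_matrix(n_h * d_h, d_c, rng)   # up-projection W^{UV}

kv_cache = []                             # holds only the latent vectors c_t^{KV}
for _ in range(3):                        # three tokens
    h_t = [rng.uniform(-1.0, 1.0) for _ in range(d)]
    kv_cache.append(matvec(w_dkv, h_t))

# Keys and values are rebuilt from the cache when attention needs them.
keys = [matvec(w_uk, c) for c in kv_cache]
values = [matvec(w_uv, c) for c in kv_cache]

cached_per_token = len(kv_cache[0])       # d_c elements
mha_per_token = 2 * n_h * d_h             # a full key plus a full value
```

With these toy sizes each token costs 2 cached elements instead of 32, at the price of two extra matrix-vector products when the keys and values are reconstructed.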

Decoupled Rotary Position Embedding

- RoPE is commonly used in transformer architectures to encode positional information into queries and keys. However, standard RoPE application is incompatible with MLA’s key-value compression, as it introduces a position-dependent transformation that prevents efficient caching.

- DeepSeek-V2 resolves this by decoupling RoPE from key compression:

- Introduce an auxiliary shared key $k_t^R$ and additional multi-head queries $q_t^R$.

- Apply RoPE only to $q_t^R$ and $k_t^R$:

$$q_t^R = \mathrm{RoPE}(W^{QR} c_t^Q), \quad k_t^R = \mathrm{RoPE}(W^{KR} h_t)$$

- where $W^{QR}, W^{KR}$ are projection matrices specific to decoupled RoPE.

- Concatenate compressed and RoPE-applied keys/queries:

$$q_t = [q_t^C; q_t^R], \quad k_t = [k_t^C; k_t^R]$$

- ensuring that RoPE affects only a subset of the attention mechanism while keeping key-value compression intact.
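The concatenation can be illustrated with a small script in which only the rotary slice of each vector is rotated. The vectors, the `rope` helper, and the base of 10000 are assumptions chosen for the example. The rotation itself is the standard pairwise RoPE rotation.

```python
import math

def rope(x, pos, base=10000.0):
    """Rotate consecutive pairs of x; pair j turns by the angle pos * base**(-2j/d)."""
    d = len(x)
    out = []
    for i in range(0, d, 2):              # i = 2j is the first index of pair j
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out += [x[i] * c - x[i + 1] * s, x[i] * s + x[i + 1] * c]
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

q_c, k_c = [0.3, -0.2, 0.5], [0.1, 0.4, -0.6]                # content parts (no RoPE)
q_rot, k_rot = [1.0, 0.5, -0.3, 0.8], [0.2, -0.7, 0.9, 0.4]  # rotary parts

def score(q_pos, k_pos):
    q = q_c + rope(q_rot, q_pos)          # q_t = [q_t^C ; q_t^R]
    k = k_c + rope(k_rot, k_pos)          # k_t = [k_t^C ; k_t^R]
    return dot(q, k)

near = score(5, 3)
shifted = score(105, 103)                 # same offset, different absolute positions
```

The two scores agree up to floating-point error because the rotary slice contributes a term that depends only on the position offset, while the content slice carries no position at all. That position-free content slice is the part that can be reconstructed from a cached latent vector.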

Comparison of KV Cache Requirements

- A key benefit of MLA is that it achieves stronger performance than standard MHA while requiring significantly less KV cache. The table below compares the cache sizes across different attention mechanisms:

| Attention Mechanism | KV Cache per Token (Elements) |
|---|---|
| MHA | $2 n_h d_h l$ |
| GQA (Grouped Query) | $2 n_g d_h l$ |
| MQA (Multi-Query) | $2 d_h l$ |
| MLA (DeepSeek-V2) | $(d_c + d_h^R) l$ |

- For DeepSeek-V2, values were set as $d_c = 4 d_h$ and $d_h^R = d_h / 2$.

- With these values the MLA cache holds $(4 + 0.5)\, d_h l = 4.5\, d_h l = 2 \times 2.25\, d_h l$ elements per token. This means that MLA achieves similar efficiency to GQA with 2.25 groups, while maintaining the performance level of MHA.
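The element counts in the table above can be checked with a few lines of arithmetic. The head count, head width, layer count, and GQA group count below are illustrative assumptions. Only the ratios $d_c = 4 d_h$ and $d_h^R = d_h / 2$ come from the text.

```python
def kv_elements_per_token(n_h, d_h, n_layers, n_groups=None, d_c=None, d_rope=None):
    """Per-token KV cache element counts for each attention variant."""
    counts = {
        "MHA": 2 * n_h * d_h * n_layers,
        "MQA": 2 * d_h * n_layers,
    }
    if n_groups is not None:
        counts["GQA"] = 2 * n_groups * d_h * n_layers
    if d_c is not None and d_rope is not None:
        counts["MLA"] = (d_c + d_rope) * n_layers
    return counts

# Illustrative sizes (assumed): 128 heads of width 128, 60 layers, 8 GQA groups.
n_h, d_h, n_layers = 128, 128, 60
counts = kv_elements_per_token(n_h, d_h, n_layers, n_groups=8,
                               d_c=4 * d_h, d_rope=d_h // 2)
equivalent_groups = counts["MLA"] / (2 * d_h * n_layers)
```

Dividing the MLA count by the cost of a single GQA group, $2 d_h l$, gives 2.25 regardless of the head width or layer count chosen, since both cancel out of the ratio.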

Enhancements in DeepSeek-V3

- DeepSeek-V3 introduces several key enhancements to Multihead Latent Attention (MLA) that significantly improve its efficiency, scalability, and precision while maintaining high model accuracy. The major improvements include:

- Further KV Cache Reduction through Optimized Compression Techniques

- Query Compression for Activation Memory Savings

- Enhanced Numerical Stability with FP8 Mixed Precision

- Adaptive Routing for Load Balancing in MLA

- With these improvements, DeepSeek-V3 reduces memory overhead, enhances numerical precision, and achieves significantly faster inference speeds while maintaining high model accuracy.

Further KV Cache Reduction Through Optimized Compression Techniques

- One of the major enhancements in DeepSeek-V3’s MLA is the more aggressive compression of the KV cache while preserving model performance. This is achieved through:

- Dynamic KV Compression Matrices: Instead of static compression matrices, DeepSeek-V3 optimizes the compression dynamically per sequence length.

- Factorized Projections for KV Storage: A dual-matrix decomposition is applied to down-project the keys and values, further reducing KV storage.

Optimized Compression Formulation

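- Given an input token representation $h_t \in \mathbb{R}^d$, standard MLA in DeepSeek-V2 computed compressed KV representations as:

$$c_t^{KV} = W^{DKV} h_t$$

- where $W^{DKV} \in \mathbb{R}^{d_c \times d}$ was a static down-projection matrix.

- In DeepSeek-V3, the compression process is enhanced with an adaptive dual-matrix compression:

$$c_t^{KV} = W^{DKV,2}\, W^{DKV,1}\, h_t$$

- where $W^{DKV,1} \in \mathbb{R}^{d_m \times d}$ is applied first and $W^{DKV,2} \in \mathbb{R}^{d_c \times d_m}$ second, with $d_m$ being an intermediate dimensionality. The factors are written in this order so that the dimensions compose ($d \to d_m \to d_c$). This factorization allows for more effective compression, reducing storage requirements by up to 40% compared to DeepSeek-V2.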

Inference-Time Expansion

- During inference, the expanded keys and values are now computed as:

$$k_t^C = W^{UK} W^{MK} c_t^{KV}, \quad v_t^C = W^{UV} W^{MV} c_t^{KV}$$

- where $W^{MK}, W^{MV}$ serve as intermediary projection layers that refine the KV reconstruction process.

- This improvement ensures that only compressed vectors are stored in memory, significantly reducing KV cache overhead.

Query Compression for Activation Memory Savings

- DeepSeek-V3 extends MLA’s low-rank compression to queries, reducing activation memory requirements without affecting attention precision.

- Query Compression Formulation:

- Instead of computing full queries:

$$q_t = W^Q h_t, \quad k_t = W^K h_t, \quad v_t = W^V h_t$$

- DeepSeek-V3 introduces an additional compression step:

$$c_t^Q = W^{DQ} h_t, \quad q_t^C = W^{UQ} c_t^Q$$

- where:

- $c_t^Q \in \mathbb{R}^{d_c'}$ is the compressed query representation.

- $d_c' \ll d_h n_h$, ensuring significantly lower activation memory usage.

- Decoupled Rotary Positional Embedding (RoPE):

- To maintain the effectiveness of positional embeddings, DeepSeek-V3 decouples Rotary Positional Embedding (RoPE) application:

$$q_t^R = \mathrm{RoPE}(W^{QR} c_t^Q), \quad k_t^R = \mathrm{RoPE}(W^{KR} h_t)$$

- where:

- $q_t^R$ and $k_t^R$ store RoPE-applied versions of the compressed representations.

- This prevents RoPE from interfering with MLA’s low-rank compression.

Reduction in Activation Memory

- With query compression, DeepSeek-V3 reduces attention activation memory by 35%, enabling efficient training on large-scale models.

Enhanced Numerical Stability with FP8 Mixed Precision

- DeepSeek-V3 leverages FP8 mixed precision training, improving numerical stability while reducing memory and computational costs.

- FP8 Training for MLA Components:

- In DeepSeek-V2, the MLA components operated primarily in BF16. DeepSeek-V3 instead adopts fine-grained FP8 quantization, applying a per-group scaling strategy:

- Activation Scaling: Per-token, per-128-channel tile quantization for activations.

- Weight Scaling: $128 \times 128$ block-wise scaling for weights.

- This reduces rounding errors and gives better dynamic range coverage during training.
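The per-token, per-128-channel activation scaling listed above can be illustrated with a small sketch. It only computes the per-tile scale factors and rescales the values; it does not perform a real FP8 cast. The constant 448 is the largest finite magnitude of the E4M3 format, and the function names are hypothetical.

```python
import random

FP8_E4M3_MAX = 448.0  # largest finite magnitude representable in E4M3
TILE = 128            # channels per scaling group


def tile_scales(row, tile=TILE):
    """Return one scale per 1 x `tile` slice of a token's activations."""
    scales = []
    for start in range(0, len(row), tile):
        amax = max(abs(v) for v in row[start:start + tile])
        scales.append(amax / FP8_E4M3_MAX if amax > 0 else 1.0)
    return scales


def scale_row(row, scales, tile=TILE):
    """Divide each tile by its own scale so its values fit the FP8 range."""
    return [v / scales[i // tile] for i, v in enumerate(row)]


rng = random.Random(0)
# One token with 256 channels; the second tile contains a large outlier.
row = [rng.gauss(0.0, 1.0) for _ in range(256)]
row[200] = 500.0

scales = tile_scales(row)
scaled = scale_row(row, scales)
print(scales)
```

Because each tile has its own scale, the outlier in the second tile does not force the first tile's values into a coarser part of the FP8 range, which is the motivation for fine-grained grouping.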

- FP8 Attention Computation:

- The attention output in DeepSeek-V3 is computed using FP8-compatible scaling:

$$o_t = \sum_{j=1}^{t} \mathrm{Softmax}_j\left(\frac{q_t^{\top} k_j}{\sqrt{d_h + d_R}}\right) v_j$$

- where:

- The scaling factor is calculated online for activations.

- The accumulation is upgraded to FP32 every 128 steps to improve numerical precision.

- Precision Comparison:

| Component | DeepSeek-V2 (BF16) | DeepSeek-V3 (FP8) |
|---|---|---|
| Query/Key Compression | $d_c = 4d_h$ | $d_c = 3d_h$ |
| KV Cache Storage | BF16 | FP8 |
| RoPE Application | Full Precision | Decoupled, FP8 |
| Attention Computation | BF16 | FP8 + FP32 Accumulation |

- By leveraging FP8 quantization, DeepSeek-V3 achieves 2.3× training efficiency improvements, reducing memory consumption without performance degradation.

Adaptive Routing for Load Balancing in MLA

- DeepSeek-V3 improves attention efficiency by introducing dynamic load balancing for query-key computation.

- Load-Adaptive Routing Mechanism:

- In DeepSeek-V2, MLA used static attention head assignments, leading to occasional computational inefficiencies when processing long sequences.

- DeepSeek-V3 refines this with adaptive routing:

$$s_{i,t} = \mathrm{Sigmoid}(u_t^{\top} e_i + b_i)$$

- where:

- $e_i$ is the centroid vector of the routed expert.

- $b_i$ is a dynamically updated bias term that adjusts for per-head workload balance.

- The bias term updates as:

$$b_i^{(t+1)} = b_i^{(t)} - \gamma \cdot (\mathrm{overloaded}_i - \mathrm{underloaded}_i)$$

- where $\gamma$ is a tuning parameter, and $\mathrm{overloaded}_i$ and $\mathrm{underloaded}_i$ indicate whether unit $i$ currently receives more or less than its share of the load.

- This ensures:

- Balanced token distribution across attention heads.

- No token-dropping during inference, preventing efficiency loss.
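The bias update rule above can be sketched as follows. This is a minimal illustration under stated assumptions: "overloaded" and "underloaded" are treated as 0/1 indicators relative to the mean load, and the function name and the load counts are hypothetical.

```python
def update_biases(biases, loads, gamma=0.001):
    """Lower the bias of overloaded units and raise it for underloaded ones."""
    target = sum(loads) / len(loads)  # assumed notion of a "fair share"
    new_biases = []
    for b, load in zip(biases, loads):
        overloaded = 1 if load > target else 0
        underloaded = 1 if load < target else 0
        new_biases.append(b - gamma * (overloaded - underloaded))
    return new_biases


biases = [0.0, 0.0, 0.0, 0.0]
loads = [40, 10, 25, 25]  # tokens handled by each unit in the last step
biases = update_biases(biases, loads, gamma=0.01)
print(biases)
```

A unit that received more than the mean load gets a lower bias, and therefore a lower routing score $s_{i,t}$ on the next step, while an underused unit is nudged upward; units already at the mean are left unchanged.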

- Computational Gains:

- By integrating adaptive routing, DeepSeek-V3 achieves:

- Uniform computational load across attention heads.

- 10% reduction in per-token inference latency.

Enhancements in DeepSeek-R1

- DeepSeek-R1 introduces several refinements to MLA, improving reasoning efficiency and inference performance while maintaining low memory overhead. Building upon the MLA optimizations in DeepSeek-V3, DeepSeek-R1 further enhances KQV compression, RL-guided attention allocation, and numerical stability mechanisms.

RL-Guided Latent Attention Optimization

- DeepSeek-R1 integrates RL techniques into MLA, optimizing attention mechanisms through GRPO. Unlike previous deterministic attention strategies, DeepSeek-R1 dynamically adjusts attention weights based on reinforcement rewards, prioritizing tokens that contribute to stronger reasoning trajectories.

- GRPO eliminates the need for a separate critic model, reducing memory overhead and improving convergence efficiency.

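- Instead of relying on a separately learned value function, GRPO estimates advantage values directly from group-level rewards:

$$A_i = \frac{r_i - \mathrm{mean}(\{r_1, r_2, \ldots, r_G\})}{\mathrm{std}(\{r_1, r_2, \ldots, r_G\})}$$

- The policy model $\pi_\theta$ is updated by maximizing:

$$J_{GRPO}(\theta) = \mathbb{E}\left[\sum_{i=1}^{G} \min\left(\frac{\pi_\theta(o_i \mid q)}{\pi_{\theta_{old}}(o_i \mid q)} A_i,\ \mathrm{clip}\left(\frac{\pi_\theta(o_i \mid q)}{\pi_{\theta_{old}}(o_i \mid q)}, 1-\epsilon, 1+\epsilon\right) A_i\right) - \beta D_{KL}\left(\pi_\theta \,\|\, \pi_{ref}\right)\right]$$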

- This approach allows DeepSeek-R1 to adaptively refine the attention mechanisms in MLA, improving token prioritization in long-context reasoning.

- Further details can be found in the section on RL Algorithm: Group Relative Policy Optimization (GRPO).
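The group-relative advantage defined above is straightforward to compute. The sketch below assumes the population standard deviation and adds a small `eps` to avoid division by zero when all rewards in a group are equal; both choices are assumptions of this example rather than details stated in the text.

```python
from statistics import mean, pstdev


def group_advantages(rewards, eps=1e-8):
    """Normalize each reward by the mean and std of its sampling group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std (assumed)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled outputs for one prompt, scored 1.0 (correct) or 0.0 (incorrect).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_advantages(rewards)
print(advantages)
```

Outputs that score above the group average receive a positive advantage and those below receive a negative one, so no separate critic model is needed to provide a baseline.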

Adaptive Query and Key Compression Via RL

One of the primary enhancements in DeepSeek-R1’s MLA is RL-guided adaptive query and key compression. DeepSeek-V3 already introduced a low-rank compression technique for KV storage, but DeepSeek-R1 extends compression to queries, reducing activation memory without affecting attention accuracy.

- Optimized Compression Formulation:

- In DeepSeek-V3, the KV cache compression was achieved using static low-rank projections:

$$c_t^{KV} = W^{DKV} h_t$$

- DeepSeek-R1 dynamically adjusts compression matrices during inference using RL-based reward maximization:

$$c_t^{KV} = W^{DKV,2} W^{DKV,1} h_t$$

- where:

- $W^{DKV,1} \in \mathbb{R}^{d_m \times d}$ and $W^{DKV,2} \in \mathbb{R}^{d_c \times d_m}$.

- $d_m$ is an intermediate dimensionality, allowing for more fine-grained latent space representations.

- Inference-Time Expansion:

- Instead of using a single up-projection matrix, DeepSeek-R1 incorporates a multi-stage expansion pipeline:

$$k_t^{C} = W^{UK} W^{MK} c_t^{KV}, \quad v_t^{C} = W^{UV} W^{MV} c_t^{KV}$$

- where $W^{MK}, W^{MV}$ refine the reconstructed keys and values, ensuring that only compressed vectors are stored in memory.

- Compression ratio improvements: DeepSeek-R1 reduces KV cache requirements by an additional 25% over DeepSeek-V3, while maintaining query-key retrieval accuracy.

Decoupled Rotary Position Embedding with Context-Specific Scaling

- While DeepSeek-V3 introduced Decoupled RoPE to separate positional encoding from compressed key-value representations, DeepSeek-R1 further refines RoPE with context-specific scaling mechanisms.

- DeepSeek-R1 adopts an enhanced RoPE formulation where RoPE is context-aware, dynamically adjusting scaling factors based on sequence length:

$$\lambda_t = \frac{1}{1 + \alpha L_t}$$

- where:

- $\lambda_t$ is the adaptive scaling factor for positional embedding.

- $\alpha$ is a hyperparameter learned via RL optimization.

- $L_t$ represents the sequence length at time step $t$.

- Implementation benefits:

- RoPE scaling ensures consistent attention alignment across varying sequence lengths.

- Prevents positional information degradation when compressing MLA’s key-value states.
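To get a feel for the scaling factor $\lambda_t$ defined above, the short sketch below evaluates it for a few sequence lengths. The value of `alpha` is purely hypothetical, and the text does not specify how $\lambda_t$ is applied to the rotary angles, so the example only evaluates the formula itself.

```python
def rope_scale(seq_len, alpha):
    """Evaluate lambda_t = 1 / (1 + alpha * L_t)."""
    return 1.0 / (1.0 + alpha * seq_len)


alpha = 1e-4  # hypothetical value, chosen only for illustration
for seq_len in (1_000, 10_000, 100_000):
    print(seq_len, round(rope_scale(seq_len, alpha), 4))
```

The factor stays close to 1 for short sequences and shrinks as $L_t$ grows, with $\alpha$ setting the length at which the damping becomes noticeable.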

FP8 Mixed Precision for MLA Stability

- DeepSeek-R1 adopts FP8 quantization for MLA computations, further improving numerical stability over DeepSeek-V3’s BF16-based approach.

- In DeepSeek-R1’s precision-aware computation pipeline, QKV matrices are quantized dynamically using per-group scaling:

$$\tilde{Q} = \frac{Q}{s_Q}, \quad \tilde{K} = \frac{K}{s_K}, \quad \tilde{V} = \frac{V}{s_V}$$

- where $s_Q, s_K, s_V$ are learned per-group scaling factors.

- The attention output is computed with hybrid precision accumulation:

$$o_t = \sum_{j=1}^{t} \mathrm{Softmax}_j\left(\frac{\tilde{q}_t^{\top} \tilde{k}_j}{\sqrt{d_h + d_R}}\right) \tilde{v}_j$$

- The accumulation process is upgraded to FP32 every 128 steps, ensuring better numerical precision while maintaining FP8 efficiency.

- Comparison of MLA Precision Strategies:

| Component | DeepSeek-V3 (BF16) | DeepSeek-R1 (FP8) |
|---|---|---|
| Query/Key Compression | $d_c = 4d_h$ | $d_c = 3d_h$ |
| KV Cache Storage | BF16 | FP8 |
| RoPE Application | Full Precision | Decoupled, FP8 |
| Attention Computation | BF16 | FP8 + FP32 Accumulation |

- Efficiency improvements:

- FP8 reduces memory footprint by ~40% compared to BF16.

- Enables 2.3× faster inference throughput for long-context tasks.

Adaptive/Dynamic Routing for Load-Balanced Attention

- DeepSeek-R1 incorporates load-balancing adaptive routing mechanisms, ensuring uniform query-key computation across attention heads.

- DeepSeek-R1 optimizes per-head workload balance using a sigmoid-based routing function:

$$s_{i,t} = \mathrm{Sigmoid}(u_t^{\top} e_i + b_i)$$

- where:

- $e_i$ represents the centroid vector of the routed attention expert.

- $b_i$ is an adaptive bias term, ensuring workload uniformity.

- Performance gains:

- Balanced computation across heads prevents bottlenecks.

- Reduces per-token inference latency by 10%.

Comparative Analysis

- DeepSeek-V2 introduced Multihead Latent Attention (MLA) with significant KV cache compression, decoupled RoPE, and basic low-rank projections for efficiency. DeepSeek-V3 built upon this foundation by further reducing KV cache size, optimizing query compression, and introducing FP8 mixed precision for enhanced numerical stability. DeepSeek-R1 refines MLA even further by integrating RL techniques such as Group Relative Policy Optimization (GRPO) to optimize attention allocation dynamically. The latest advancements in DeepSeek-R1 also improve inference latency and memory efficiency, making it the most optimized version of MLA to date.

- The table below provides a comparative analysis of DeepSeek-V2, DeepSeek-V3, and DeepSeek-R1 for MLA. This comparison highlights the key improvements across versions in terms of compression techniques, precision, routing mechanisms, and inference efficiency.

| Feature | DeepSeek-V2 | DeepSeek-V3 | DeepSeek-R1 |
|---|---|---|---|
| Low-Rank KV Compression | ✅ | ✅ (Optimized with Factorized Projections) | ✅ (RL-Optimized Adaptive Compression) |
| Query Compression | ❌ | ✅ (Static Low-Rank Query Compression) | ✅ (RL-Guided Dynamic Query Compression) |
| KV Cache Reduction | ✅ (93.3% Reduction) | ✅ (40% Further Reduction) | ✅ (25% Further Reduction over V3) |
| RoPE Application | ✅ (Decoupled RoPE) | ✅ (Decoupled with Context-Specific Scaling) | ✅ (Enhanced Context-Aware Scaling) |
| Precision Format | BF16 | FP8 (Fine-Grained Mixed Precision) | FP8 (Per-Group Scaling, FP32 Accumulation) |
| Adaptive Routing for MLA | ❌ | ✅ (Static Adaptive Routing) | ✅ (Load-Balanced Dynamic Routing) |
| Inference Latency Reduction | ✅ (KV Compression Reduces Latency) | ✅ (10% Faster than V2) | ✅ (10% Faster than V3) |
| RL Enhancements | ❌ | ❌ | ✅ (GRPO for Adaptive MLA Optimization) |
| Numerical Stability Improvements | ✅ (Basic Stability Enhancements) | ✅ (FP8 with Mixed Precision) | ✅ (FP8 with RL-Guided Stability Mechanisms) |
| Long-Context Performance | ✅ (Supports Longer Contexts) | ✅ (Further Optimized) | ✅ (Enhanced with RL-Guided Token Prioritization) |

Implementation

- The implementation of MLA in DeepSeek-R1 incorporates several optimizations aimed at maximizing efficiency while preserving accuracy. This section details the core mechanisms underlying MLA, including key-value compression, query transformation, position encoding, and computational optimizations.

Background: Standard Multi-Head Attention (MHA)

- For a standard multi-head attention (MHA) mechanism, the Key (K), Query (Q), and Value (V) matrices are computed as follows:

$$K, Q, V = W_k X,\ W_q X,\ W_v X$$

- where $W_k, W_q, W_v$ are weight matrices for key, query, and value projections.

- The attention weights are computed as:

$$A = \mathrm{Softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right)$$

- and the output is given by:

$$O = A V$$

- This requires storing the full key-value cache during inference, leading to significant memory overhead.
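As a concrete reference point, the scaled dot-product attention above can be written in plain Python for a single head. This is a minimal sketch: it stores one row per token (the transpose of the column convention used in the formulas), omits the causal mask and the projection matrices, and uses toy inputs.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Q, K, V are lists of row vectors, one row per token."""
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, V))
                    for c in range(len(V[0]))])
    return out


Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
O = attention(Q, K, V)
print(O)
```

Every row of `K` and `V` must be kept for all previous tokens during generation, which is the memory cost that MLA's latent compression targets.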

Low-Rank Key-Value Joint Compression

- One of the fundamental optimizations in MLA is the compression of KV pairs into a lower-dimensional latent space, significantly reducing memory overhead. Specifics below:

- Compression Mechanism:

- The key and value representations are compressed into a shared latent space before being projected back into their respective dimensions. This is achieved through a two-step transformation:

$$c_t^{KV} = W^{DKV} h_t$$

$$k_t^{C} = W^{UK} c_t^{KV}, \quad v_t^{C} = W^{UV} c_t^{KV}$$

- where:

- $c_t^{KV} \in \mathbb{R}^{d_c}$ is the compressed latent representation.

- $W^{DKV} \in \mathbb{R}^{d_c \times d}$ is a down-projection matrix.

- $W^{UK}, W^{UV} \in \mathbb{R}^{d_h n_h \times d_c}$ are up-projection matrices for keys and values, respectively.

- Memory Reduction:

- Instead of storing full-sized keys and values for each token, only $c_t^{KV}$ is cached.

- The reduction in memory footprint allows DeepSeek-R1 to process significantly longer sequences at a lower computational cost.
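The memory saving from caching only $c_t^{KV}$ can be estimated with simple arithmetic. The sketch below counts cached elements per token per layer; the head count and head dimension are hypothetical toy values, and $d_c = 4 d_h$ is taken from the comparison table earlier in this section.

```python
def kv_cache_elements_mha(n_heads, d_head):
    """Full keys and values: two vectors of size n_heads * d_head per token."""
    return 2 * n_heads * d_head


def kv_cache_elements_mla(d_c, d_rope=0):
    """Compressed latent, plus an optional decoupled RoPE key."""
    return d_c + d_rope


n_h, d_h = 32, 128  # hypothetical head count and head dimension
d_c = 4 * d_h       # latent size, following the d_c = 4 d_h table entry

mha = kv_cache_elements_mha(n_h, d_h)
mla = kv_cache_elements_mla(d_c)
reduction = 1 - mla / mha
print(mha, mla, reduction)
```

With these toy numbers the cache shrinks from 8192 to 512 elements per token, a reduction of 93.75%; the exact figure for a real model depends on its head count and on the extra dimensions cached for the decoupled RoPE key.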


Multi-Stage Compression

- DeepSeek-R1 refines the compression mechanism by introducing an additional transformation layer, leading to a multi-stage compression approach. Specifics below:

- Additional Projection Layer:

- To further minimize storage costs, a secondary compression layer is introduced:

$$c_t^{KV\prime} = W^{DKV2} f\left(W^{DKV} h_t\right)$$

- where:

- $W^{DKV2} \in \mathbb{R}^{d_c' \times d_c}$ is a second down-projection matrix.

- $f(\cdot)$ is a non-linear activation function applied to improve representation learning.

- $d_c' < d_c$ ensures an even smaller KV cache size.

- Performance Benefits:

- This additional step further reduces KV storage while maintaining sufficient information for attention mechanisms.

- Experiments indicate that this leads to a 10-15% reduction in memory footprint compared to DeepSeek-V3.

Query Compression and Optimization

- Similar to keys and values, queries are also compressed, allowing for efficient computation and reduced activation memory during training. Specifics below:

- Query Transformation:

- Queries undergo a two-step transformation similar to keys and values:

$$c_t^{Q} = W^{DQ} h_t$$

$$q_t^{C} = W^{UQ} c_t^{Q}$$

- where:

- $W^{DQ} \in \mathbb{R}^{d_c' \times d}$ is a down-projection matrix for queries.

- $W^{UQ} \in \mathbb{R}^{d_h n_h \times d_c'}$ maps the compressed query representation back to its original dimensionality.

- Multi-Layer Query Refinement:

- DeepSeek-R1 optimizes query projection through additional adaptive scaling layers.

- The transformation matrices $W^{DQ}$ and $W^{UQ}$ are dynamically adjusted during fine-tuning using RL.

Decoupled Rotary Position Embedding (RoPE)

- To ensure robust long-context handling, DeepSeek-R1 applies RoPE in a decoupled manner, separating positional encodings from the latent attention mechanism. Specifics below:

- Independent Positional Encoding for Keys and Queries:

$$k_t^{R} = \mathrm{RoPE}(W^{KR} h_t)$$

$$q_t^{R} = \mathrm{RoPE}(W^{QR} c_t^{Q})$$

- where:

- $W^{KR} \in \mathbb{R}^{d_h^R \times d}$ generates positional embeddings for keys.

- $W^{QR} \in \mathbb{R}^{d_h^R n_h \times d_c'}$ generates positional embeddings for queries.

- The RoPE transformation ensures that relative positional information is preserved while allowing the KV cache to remain compact.

- Computation Efficiency of RoPE in DeepSeek-R1:

- RoPE application is delayed until the final stages of query-key interaction, preventing unnecessary memory bloat.

- Compared to DeepSeek-V2 and V3, DeepSeek-R1 achieves 25% faster query-key retrieval.
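The property that makes the decoupled RoPE branch useful is that the dot product of two rotated vectors depends only on their relative position. The sketch below implements the standard pairwise rotation and checks this numerically; the input vectors are arbitrary stand-ins for $W^{QR} c_t^{Q}$ and $W^{KR} h_t$, and the base of 10000 is the conventional default, assumed here.

```python
import math


def rope(x, pos, base=10000.0):
    """Rotate consecutive pairs of x by position-dependent angles."""
    d = len(x)
    out = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out.extend([x[i] * c - x[i + 1] * s, x[i] * s + x[i + 1] * c])
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q_r = [0.3, -1.2, 0.7, 0.5]  # stands in for W^{QR} c_t^Q
k_r = [1.0, 0.4, -0.6, 0.9]  # stands in for W^{KR} h_t

# Both pairs of positions are 4 tokens apart.
score_a = dot(rope(q_r, 7), rope(k_r, 3))
score_b = dot(rope(q_r, 104), rope(k_r, 100))
print(score_a, score_b)
```

The two scores agree up to floating-point error, so the positional branch can be kept as a small separate vector while the content branch stays in the compressed latent space.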


Attention Computation in MLA

- The final attention output in MLA is computed by integrating compressed keys, queries, and values in a modified attention mechanism. Specifics below:

- Modified Attention Scores:

- The attention scores are computed using both compressed latent keys and explicit positional encodings:

$$A_{t,j,i} = \frac{q_{t,i}^{\top} k_{j,i}}{\sqrt{d_h + d_R}}$$

- This formulation ensures that positional embeddings contribute proportionally to attention strength.

- Weighted Value Aggregation:

- The attention output is computed as:

$$o_{t,i} = \sum_{j=1}^{t} \mathrm{Softmax}_j(A_{t,j,i})\, v_{j,i}^{C}$$

- The softmax operation normalizes the attention scores across the sequence.

- Final Output Projection:

- The final output is obtained via:

$$u_t = W^{O} [o_{t,1}; o_{t,2}; \ldots; o_{t,n_h}]$$

- where:

- $W^{O}$ is the output projection matrix mapping the concatenated attention outputs back to the full embedding space.
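The score and aggregation steps above can be sketched for a single head. The example assumes that the per-head query and key are formed by concatenating the content part (superscript $C$) with the RoPE part (superscript $R$), which is why the scale is $\sqrt{d_h + d_R}$; that concatenation, the function name, and the toy vectors are assumptions of this sketch.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def mla_head_output(q_c, q_r, keys_c, keys_r, values_c):
    """Attention output o_{t,i} for one head over all cached positions."""
    d_h, d_r = len(q_c), len(q_r)
    q = q_c + q_r  # concatenate content and positional parts (assumed)
    scores = []
    for k_c, k_r in zip(keys_c, keys_r):
        k = k_c + k_r
        scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_h + d_r))
    weights = softmax(scores)
    return [sum(w * v[c] for w, v in zip(weights, values_c))
            for c in range(len(values_c[0]))]


q_c, q_r = [1.0, 0.0], [0.5]
keys_c = [[1.0, 0.0], [0.0, 1.0]]
keys_r = [[0.5], [-0.5]]
values_c = [[1.0, 0.0], [0.0, 1.0]]
o = mla_head_output(q_c, q_r, keys_c, keys_r, values_c)
print(o)
```

The per-head outputs would then be concatenated across heads and multiplied by $W^{O}$ to produce $u_t$.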


RL-Optimized MLA

- DeepSeek-R1 incorporates RL to further optimize MLA’s transformation matrices. Specifics below:

- Fine-Tuning with RL:

- Using GRPO, MLA is rewarded based on efficient memory usage and retrieval accuracy.

- The policy update equation is:

$$J_{GRPO}(\theta) = \mathbb{E}\left[\sum_{i=1}^{G} \min\left(\frac{\pi_\theta(o_i \mid q)}{\pi_{\theta_{old}}(o_i \mid q)} A_i,\ \mathrm{clip}\left(\frac{\pi_\theta(o_i \mid q)}{\pi_{\theta_{old}}(o_i \mid q)}, 1-\epsilon, 1+\epsilon\right) A_i\right)\right]$$

- where:

- $\pi_\theta$ represents the updated policy.

- $A_i$ is the advantage function guiding optimization.

- Empirical Results of RL Optimization:

- RL-based fine-tuning further enhances attention fidelity without increasing memory usage.

- Empirical evaluation shows a 6% improvement in retrieval accuracy over DeepSeek-V3.
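The clipped term inside the policy update equation above can be evaluated directly for a small group. The sketch takes precomputed probability ratios $\pi_\theta / \pi_{\theta_{old}}$ and advantages as inputs; the function name, the value $\epsilon = 0.2$, and the sample numbers are illustrative assumptions.

```python
def grpo_surrogate(ratios, advantages, eps=0.2):
    """Sum of min(r * A, clip(r, 1 - eps, 1 + eps) * A) over a group."""
    total = 0.0
    for r, a in zip(ratios, advantages):
        clipped = min(max(r, 1 - eps), 1 + eps)
        total += min(r * a, clipped * a)
    return total


ratios = [1.5, 0.5, 1.0]       # pi_theta / pi_theta_old for each output
advantages = [1.0, -1.0, 0.5]  # group-relative advantages A_i
value = grpo_surrogate(ratios, advantages)
print(value)
```

For the first output the ratio 1.5 is clipped to 1.2, which caps the benefit of pushing an already favored output further; for the second, the `min` selects the more pessimistic clipped term, so the objective never overstates an improvement.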


Computational and Hardware Optimization

- Inference-Time Efficiency:

- MLA in DeepSeek-R1 is implemented with tensor-parallelized computations, optimizing throughput across GPUs.

- Memory overhead is minimized through low-precision KV storage (FP8 format).

- Cross-Node Communication Optimization:

- Uses optimized all-to-all communication kernels to fully utilize InfiniBand (IB) and NVLink bandwidths.

- Reduces inter-node communication latency by 30%, improving distributed inference performance.

Comparative Efficiency Analysis

| Attention Mechanism | KV Cache Per Token | Computational Complexity | Performance Impact |
|---|---|---|---|
| MHA (Standard) | $O(N d_h)$ | $O(N^2 d_h)$ | High Accuracy, High Cost |
| MQA | $O(d_h)$ | $O(N d_h)$ | Lower Memory, Degraded Performance |
| GQA | $O(g d_h)$ (groups) | $O(N d_h)$ | Moderate Balance |
| MLA (DeepSeek-V2) | $O(d_L)$ | $O(N d_L)$ | High Efficiency, Minimal Loss |
| MLA + Hierarchical Caching (DeepSeek-R1) | $O(d_L)$ (with reuse) | $O(N d_L)$ | Peak Efficiency, Retains Performance |

Multi-Token Prediction (MTP)

Overview

- Multi-Token Prediction (MTP) allows DeepSeek-R1 to predict multiple tokens in parallel, significantly improving inference speed.

Key Features

- Parallel Multi-Token Prediction: DeepSeek-R1 enhances inference speed by predicting multiple tokens simultaneously rather than sequentially. This reduces decoding latency and allows for faster text generation without compromising coherence.

- Cross-Depth Residual Connections: Unlike DeepSeek-V3, which conditions token predictions only on prior module outputs, DeepSeek-R1 integrates residual connections between MTP layers. This allows deeper MTP modules to utilize features from earlier depths, improving long-term dependencies.

- Adaptive Prediction Granularity: The model dynamically adjusts how many future tokens each module predicts based on the input sequence’s complexity. This ensures fine-grained predictions for short contexts and broader lookahead when handling longer sequences.

- Depth-Aware Loss Weighting: DeepSeek-R1 refines its training objective by prioritizing mid-range MTP depths using a sigmoid-based weighting function. This enhances learning efficiency by directing more gradient updates where they have the greatest impact.

- Memory-Efficient Parameter Sharing: The model reduces memory consumption by reusing transformer layers across MTP depths. Instead of separate layers for each module, DeepSeek-R1 applies depth-conditioned routing, minimizing redundant computations while maintaining unique depth-wise representations.

- Optimized Speculative Decoding: DeepSeek-R1 improves speculative decoding by introducing probabilistic agreement checking. Predictions are accepted based on confidence thresholds rather than requiring exact matches, reducing rejection rates and accelerating inference.

- Empirical Gains in Training and Inference: Thanks to these enhancements, DeepSeek-R1 achieves 22% faster training convergence, a 1.5× improvement in generation speed, and 18% better long-form perplexity compared with DeepSeek-V3.

Evolution from DeepSeek-V3 to DeepSeek-R1

MTP in DeepSeek-V3

- MTP was introduced in DeepSeek-V3 as a training objective to improve data efficiency and predictive capabilities by enabling the model to anticipate multiple future tokens at each position. Unlike conventional next-token prediction, which limits training to a single-step forward prediction, MTP extends this scope to multiple future tokens, thereby densifying training signals and enhancing long-term coherence in text generation.

- DeepSeek-V3 implements MTP using a structured pipeline with several key design choices, including sequential prediction modules, shared embeddings and output heads, and a hierarchical loss formulation. These innovations improve model performance, enable speculative decoding, and enhance overall data efficiency. DeepSeek-R1 further builds on these foundations, optimizing MTP implementation for improved reasoning tasks.

- The following sub-sections detail the features introduced in DeepSeek-V3 to support MTP.

Sequential Multi-Token Prediction Modules

- DeepSeek-V3 employs $D$ sequential MTP modules, where each module is responsible for predicting an additional future token. Instead of predicting future tokens in parallel with independent output heads (as in Better & Faster Large Language Models via Multi-token Prediction by Gloeckle et al., 2024), DeepSeek-V3 maintains a causal chain across prediction depths, ensuring each token is conditioned on prior MTP module outputs.

- For the $k$-th MTP module, the representation of the $i$-th input token at depth $k$ is computed as:

$$h_i^{\prime(k)} = M_k \left[\mathrm{RMSNorm}\left(h_i^{(k-1)}\right); \mathrm{RMSNorm}\left(\mathrm{Emb}(t_{i+k})\right)\right]$$

- where:

- $h_i^{(k-1)}$ is the representation from the previous depth (or from the main model when $k=1$).

- $M_k \in \mathbb{R}^{d \times 2d}$ is the projection matrix.

- $\mathrm{Emb}(\cdot)$ is the shared embedding function.

- Each module applies a transformer block:

$$h_{1:T-k}^{(k)} = \mathrm{TRM}_k\left(h_{1:T-k}^{\prime(k)}\right)$$

- where $T$ is the input sequence length. The output of this module is passed to a shared output head:

$$P_{i+k+1}^{(k)} = \mathrm{OutHead}\left(h_i^{(k)}\right)$$

- where $P_{i+k+1}^{(k)}$ is the probability distribution for the $k$-th future token.

MTP Training Objective

- For each prediction depth $k$, DeepSeek-V3 computes a cross-entropy loss:

$$\mathcal{L}_{MTP}^{(k)} = -\frac{1}{T} \sum_{i=2+k}^{T+1} \log P_i^{(k)}[t_i]$$

- where $t_i$ is the ground-truth token at position $i$, and $P_i^{(k)}[t_i]$ is the predicted probability for that token. The overall MTP loss is the mean of losses across all depths, scaled by a factor $\lambda$:

$$\mathcal{L}_{MTP} = \frac{\lambda}{D} \sum_{k=1}^{D} \mathcal{L}_{MTP}^{(k)}$$

- where $D$ is the number of MTP modules.
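The two loss formulas above can be checked with a small numeric example. The sketch takes, for each depth, the probabilities the model assigned to the ground-truth tokens; depth $k$ contributes $T - k$ terms, matching the summation range $i = 2+k, \ldots, T+1$. The probabilities and the value of `lam` are made up for illustration.

```python
import math


def depth_loss(true_token_probs, T):
    """Cross-entropy at one depth: -(1/T) * sum(log P[t_i])."""
    return -sum(math.log(p) for p in true_token_probs) / T


def mtp_loss(probs_per_depth, T, lam):
    """Mean of the per-depth losses, scaled by lam."""
    D = len(probs_per_depth)
    return lam / D * sum(depth_loss(p, T) for p in probs_per_depth)


T = 4
probs_per_depth = [
    [0.5, 0.5, 0.5],  # depth 1: T - 1 = 3 predicted positions
    [0.25, 0.25],     # depth 2: T - 2 = 2 predicted positions
]
loss = mtp_loss(probs_per_depth, T, lam=0.3)
print(loss)
```

In training, this auxiliary term would be added to the main next-token loss, with `lam` controlling how much weight the multi-token objective receives.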

Memory Optimization with Shared Embeddings and Output Heads

- To minimize additional memory costs from MTP modules, DeepSeek-V3:

- Shares embeddings across MTP modules.

- Uses a single shared output head instead of independent ones for each MTP depth.

- Implements weight sharing between the primary model and MTP modules.

- This design ensures that additional forward passes in MTP training do not substantially increase parameter storage requirements.

Inference Strategy and Speculative Decoding

- While MTP is primarily used to improve training, DeepSeek-V3 also explores the use of MTP modules for speculative decoding at inference time. The idea is to use the additional token predictions as speculative completions, similar to methods proposed in Fast Inference from Transformers via Speculative Decoding by Leviathan et al. (2023):

- The primary model predicts token $t_{i+1}$ as usual.

- The first MTP module simultaneously predicts $t_{i+2}$, allowing early validation of token coherence.

- If MTP predictions match beam search results, multiple tokens can be emitted at once.

- This strategy significantly accelerates inference while maintaining output fluency.
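The acceptance step in the list above can be sketched as a prefix match between drafted tokens and the tokens the main model confirms for the same positions. This is a simplified illustration with exact-match acceptance; the function name and token ids are hypothetical, and the verification pass that produces `verified_tokens` is assumed to have already run.

```python
def accept_drafts(draft_tokens, verified_tokens):
    """Keep the longest prefix of drafted tokens the main model agrees with."""
    accepted = []
    for draft, verified in zip(draft_tokens, verified_tokens):
        if draft != verified:
            break
        accepted.append(draft)
    return accepted


draft_tokens = [11, 42, 7]     # proposed by the MTP module(s)
verified_tokens = [11, 42, 9]  # main model's choices for the same positions
accepted = accept_drafts(draft_tokens, verified_tokens)
print(accepted)
```

Here two tokens are emitted in one step instead of one; the third draft is discarded and decoding resumes from the main model's own prediction at that position.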

Ablation Studies on Multi-Token Prediction

- DeepSeek-V3 conducts detailed ablation studies to assess the impact of MTP. Key findings include:

- Impact on Training Efficiency: Training with MTP leads to a 15% improvement in data efficiency, allowing models to learn more per token.

- Effect on Long-Term Coherence: Models trained with MTP exhibit a higher perplexity improvement at longer sequence lengths compared to traditional next-token prediction.

- Influence on Speculative Decoding Accuracy: The inclusion of MTP modules in decoding reduces rejection rates in speculative generation by 35%, enhancing latency benefits.

Enhancements in DeepSeek-R1

- DeepSeek-R1 introduces significant advancements in MTP, building upon the structured MTP framework established in DeepSeek-V3. The improvements primarily focus on better token dependency modeling, adaptive prediction granularity, loss function refinement, memory-efficient parameter sharing, and optimized inference strategies. These enhancements enable DeepSeek-R1 to achieve superior reasoning capability, enhanced training efficiency, and significantly reduced inference latency. Below, we detail each feature.

Improved Token Dependency Modeling in MTP

- DeepSeek-R1 enhances the sequential nature of MTP modules by incorporating cross-depth residual connections between MTP layers. Unlike DeepSeek-V3, where each MTP module strictly predicts tokens conditioned only on prior module outputs, DeepSeek-R1 introduces depth-wise feature aggregation to facilitate richer information propagation.

- The updated token representation at the $k$-th depth is computed as:

$$h_i^{\prime(k)} = M_k \left[\mathrm{RMSNorm}\left(h_i^{(k-1)}\right); \mathrm{RMSNorm}\left(\mathrm{Emb}(t_{i+k})\right); \mathrm{Res}\left(h_i^{(k-2)}\right)\right]$$

- where:

- $\mathrm{Res}\left(h_i^{(k-2)}\right)$ is a residual connection from two depths earlier, weighted by a learnable scalar $\alpha_k$:

$$\mathrm{Res}\left(h_i^{(k-2)}\right) = \alpha_k \cdot h_i^{(k-2)}$$

- This modification ensures that deeper MTP modules receive contextualized features from multiple depths, leading to improved coherence in multi-step predictions.

Adaptive Prediction Granularity

- DeepSeek-R1 refines MTP’s granularity by dynamically adjusting the number of future tokens predicted per module based on the context length and complexity of the input. Instead of fixing the number of predicted tokens per step, DeepSeek-R1 adapts the prediction horizon dynamically.

- The number of future tokens predicted at depth $k$ is given by:

$$N_k = \min\left(\lfloor \gamma_k \cdot T \rfloor,\ D - k\right)$$

- where:

- $\gamma_k$ is a learnable scaling factor that determines adaptive granularity.

- $T$ is the sequence length.

- $D$ is the maximum MTP depth.

- Intuition: In early sequence regions, shorter horizons (1-2 future tokens) are preferred for precise token alignment, whereas deeper into the sequence, the model extends the prediction horizon, increasing efficiency without sacrificing accuracy.

Loss Function Refinement for Multi-Depth Learning

- DeepSeek-R1 improves the MTP loss formulation by introducing depth-aware weighting to prioritize learning at certain depths. In DeepSeek-V3, all depths were weighted equally, leading to inefficient optimization at extreme depths.

- The new depth-weighted MTP loss is formulated as:

$$\mathcal{L}_{MTP} = \frac{\lambda}{D} \sum_{k=1}^{D} w_k \cdot \mathcal{L}_{MTP}^{(k)}$$

- where:

- $w_k$ is a depth-dependent weighting factor:

$$w_k = \frac{1}{1 + e^{-\beta (k - D/2)}}$$

- Because this sigmoid is centered at $k = D/2$, the weight rises from near 0 at shallow depths, through 0.5 at the mid-range depth, toward 1 at the deepest modules, with $\beta$ controlling how sharp that transition is.
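Evaluating the weighting function for a small depth count makes its shape concrete. The sketch below computes $w_k$ exactly as written above; $D = 4$ and $\beta = 1$ are arbitrary illustrative choices.

```python
import math


def depth_weight(k, D, beta=1.0):
    """w_k = 1 / (1 + exp(-beta * (k - D / 2)))."""
    return 1.0 / (1.0 + math.exp(-beta * (k - D / 2)))


D = 4
weights = [depth_weight(k, D) for k in range(1, D + 1)]
print([round(w, 3) for w in weights])
```

As written, the weight equals 0.5 at the midpoint $k = D/2$ and increases monotonically with depth, so larger $\beta$ values concentrate more of the loss weight on the deeper modules.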


Optimized Memory Efficiency with Parameter Sharing

- One major enhancement in DeepSeek-R1 is the parameter sharing strategy across MTP modules, significantly reducing memory overhead while maintaining distinct depth-wise representations.

- Instead of maintaining separate transformer layers for each MTP depth as in DeepSeek-V3, DeepSeek-R1 re-uses the main model’s layers with depth-conditioned routing.

- The token representation at depth $k$ is now passed through a single, shared transformer layer with an additional depth embedding:

$$h_{1:T-k}^{(k)} = \mathrm{TRM}\left(h_{1:T-k}^{\prime(k)}, \mathrm{DepthEmb}(k)\right)$$

- The depth embedding $\mathrm{DepthEmb}(k)$ ensures that different MTP layers retain unique learned behaviors while leveraging the same computational graph.

Enhanced Inference Strategy with Speculative Decoding

- DeepSeek-R1 significantly refines the speculative decoding strategy introduced in DeepSeek-V3 by allowing adaptive token validation. Specifics below:

- In DeepSeek-V3, speculative decoding was limited to greedy agreement checking, where only exact matches between MTP predictions and main model outputs were used to accelerate inference.

- DeepSeek-R1 introduces probabilistic agreement checking, where a predicted token $\hat{t}_{i+2}$ from MTP is accepted if:

$$P_{MTP}^{(1)}(\hat{t}_{i+2}) > \tau\, P_{Main}(\hat{t}_{i+2})$$

- where:

- $P_{MTP}^{(1)}(\hat{t}_{i+2})$ is the MTP module’s probability of the token.

- $P_{Main}(\hat{t}_{i+2})$ is the main model’s probability.

- $\tau$ is a tunable acceptance threshold.

- Impact: This strategy allows high-confidence speculative predictions to be used even when they do not perfectly match the main model’s top prediction, reducing rejection rates by over 40%, accelerating inference.
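The acceptance condition above reduces to a single comparison. The sketch below applies it to two hypothetical drafts; the probabilities and the threshold value are made up for illustration.

```python
def accept_probabilistic(p_mtp, p_main, tau):
    """Accept the drafted token if P_MTP > tau * P_Main."""
    return p_mtp > tau * p_main


tau = 0.8  # hypothetical acceptance threshold
confident_draft = accept_probabilistic(p_mtp=0.6, p_main=0.7, tau=tau)
weak_draft = accept_probabilistic(p_mtp=0.3, p_main=0.7, tau=tau)
print(confident_draft, weak_draft)
```

Lowering `tau` accepts more drafts and speeds up decoding, at the cost of admitting tokens that the main model rates noticeably higher than the MTP module does.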

Empirical Gains from DeepSeek-R1’s MTP Enhancements

- DeepSeek-R1’s refinements to MTP result in significant empirical gains over DeepSeek-V3:

- Training Efficiency: Training convergence improved by 22% due to depth-weighted loss prioritization.

- Inference Speed: Speculative decoding optimizations resulted in a 1.5× faster generation speed.

- Long-Term Coherence: Perplexity on long-form text improved by 18%, showing that the revised token dependency modeling enhances context retention over long horizons.

Comparative Analysis

- DeepSeek-R1 builds upon DeepSeek-V3’s foundational MTP structure while addressing its limitations. The improvements, particularly in adaptive granularity, loss function optimization, and speculative decoding, result in faster, more coherent, and memory-efficient predictions. These refinements collectively enhance DeepSeek-R1’s reasoning capability and inference performance. The table below provides a comparative summary of key MTP features in DeepSeek-V3 and DeepSeek-R1.

| Feature | DeepSeek-V3 | DeepSeek-R1 |
|---|---|---|
| Sequential MTP Modules | ✅ Structured pipeline with sequential depth modules | ✅ Enhanced with cross-depth residual connections |
| Shared Embeddings for MTP | ✅ Shared token embeddings across modules | ✅ Further optimized with depth-conditioned routing |
| Prediction Granularity | ❌ Fixed number of future token predictions per module | ✅ Adaptive token horizon based on sequence complexity |
| Loss Function Optimization | ❌ Uniform loss weighting across MTP depths | ✅ Depth-aware weighting for optimized learning |
| Memory Optimization Strategy | ✅ Shared output heads for reduced memory footprint | ✅ Further improved with depth-conditioned layer sharing |
| Inference Speed Boost via MTP | ✅ Basic speculative decoding | ✅ Probabilistic speculative decoding, reducing rejection rates by 40% |
| Training Efficiency Improvement | ✅ 15% increase in data efficiency | ✅ 22% faster convergence with improved loss prioritization |
| Long-Term Coherence in Predictions | ✅ Improved over next-token prediction models | ✅ 18% better perplexity in long-form text |
| Speculative Decoding Acceptance Strategy | ❌ Strict token match required for validation | ✅ Probabilistic validation based on confidence threshold |
| Impact on Latency Reduction | ✅ Moderate improvement in decoding speed | ✅ 1.5× faster inference due to reduced rejection rates |

Implementation Details

- DeepSeek-R1 incorporates an advanced MTP strategy to boost decoding efficiency and reduce latency. Unlike traditional autoregressive decoding, where each token is predicted sequentially, MTP allows multiple tokens to be predicted per decoding step. This is achieved through a hierarchical approach that balances performance improvements with the risk of error propagation. Specifics below:

- Multi-Layer Representation Propagation:

- DeepSeek-R1’s transformer architecture is enhanced to support simultaneous token prediction across multiple layers.

- Each layer in the model computes token probabilities independently while maintaining consistency across the sequence.

- Speculative Decoding with Verification:

- During inference, DeepSeek-R1 generates speculative multi-token sequences and verifies their coherence through a hierarchical token verification mechanism.

- This approach dynamically adjusts the number of tokens predicted in each step based on confidence scores, ensuring that low-confidence tokens are reevaluated before finalizing outputs.

- Training Objective:

- The model is trained with a combination of standard cross-entropy loss for next-token prediction and an auxiliary loss that encourages parallel token prediction.

- The loss function is formulated as:

$$\mathcal{L}_{MTP} = \lambda \sum_{k=1}^{D} \mathcal{L}_{CE}(P_k, T_k)$$

- where $D$ is the number of parallel tokens predicted per step, and $\mathcal{L}_{CE}$ represents the cross-entropy loss for each predicted token.

- Adaptive Token Selection with RL:

- DeepSeek-R1 employs an RL-based approach to refine multi-token predictions, ensuring that higher-quality token sequences are prioritized.

- The RL framework assigns rewards based on coherence, fluency, and alignment with ground-truth data.

- This RL-driven strategy effectively reduces hallucinations and improves long-range coherence in generated text.

- Memory and Compute Efficiency:

- The MTP module is optimized to minimize additional memory overhead, leveraging weight-sharing mechanisms within transformer layers.

- The speculative decoding mechanism integrates efficiently with DeepSeek-R1’s caching strategy, ensuring that redundant computations are avoided.


Mathematical Formulation

- The prediction function follows an autoregressive formulation:

$$P(y_{1:T} \mid x) = \prod_{t=1}^{T} P(y_t \mid y_{<t}, x)$$

- By introducing parallel decoding, DeepSeek-R1 reduces the number of sequential decoding steps from $O(T)$ to $O(T/k)$, where $k$ is the number of tokens predicted per step.
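The step-count reduction above can be illustrated with a small calculation. The sketch assumes the best case, in which all $k$ tokens proposed in a step are accepted; with rejections the real number of steps lies between this value and $T$.

```python
import math


def decoding_steps(T, k):
    """Sequential steps needed to emit T tokens at k tokens per step."""
    return math.ceil(T / k)


for k in (1, 2, 4):
    print(k, decoding_steps(1000, k))
```

The total amount of computation per generated token does not shrink by the same factor, since each step still has to score and verify the additional tokens; the gain is in the number of sequential forward passes.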

Training Pipeline: from Pre-Training to Reasoning

- DeepSeek-R1 employs a multi-stage training pipeline designed to enhance reasoning capabilities while maintaining efficiency. This process includes distinct phases, each guided by task-specific loss functions and reward mechanisms, ensuring progressive refinement in performance. The key stages are SFT, RL, Rejection Sampling, and an additional RL phase for generalization. Together, these steps improve DeepSeek-R1’s ability to tackle complex reasoning tasks while ensuring clarity and coherence in its outputs.

- DeepSeek-R1’s training process unfolds in four key phases, each progressively refining its reasoning ability while expanding generalization and alignment:

- Cold Start with SFT

- Fine-tuning on thousands of high-quality Chain-of-Thought (CoT) examples to establish structured reasoning.

- Uses a structured output format for improved readability.

- Employs a cross-entropy-based loss function for optimization.

- RL with GRPO

- Policy optimization via Group Relative Policy Optimization (GRPO), which normalizes rewards within a group of sampled responses.

- Rewards assigned based on accuracy, format consistency, and language alignment.

- Mitigates reward hacking by avoiding neural reward models.

- Rejection Sampling & Expanded SFT

- Filters high-quality RL outputs to enhance supervised fine-tuning.

- Expands training data to include non-reasoning tasks, ensuring broader applicability.

- Final RL Phase for Generalization

- Integrates diverse task distributions, extending beyond structured reasoning.

- Ensures alignment with human feedback, particularly in conversational settings.

- Through this multi-stage refinement process, DeepSeek-R1 surpasses previous models in accuracy, coherence, and real-world usability, setting a new benchmark for AI reasoning capabilities.

Stage 1: Cold Start with SFT

Fine-Tuning with High-Quality Chain-of-Thought (CoT) Examples

- DeepSeek-R1 begins its journey by fine-tuning the DeepSeek-V3-Base model with a carefully curated dataset of high-quality Chain-of-Thought (CoT) examples. These examples are obtained through a combination of:

- Few-shot prompting: Generating detailed reasoning paths using large-scale pre-trained models.

- Manual annotation and refinement: Filtering and refining reasoning steps through human reviewers.

- Post-processing DeepSeek-R1-Zero outputs: Extracting well-structured reasoning paths from the RL-trained precursor model.

- The fine-tuning step ensures that DeepSeek-R1 has a structured reasoning framework before entering RL. Unlike DeepSeek-R1-Zero, which learned reasoning solely from RL, DeepSeek-R1 leverages cold-start fine-tuning to avoid the chaotic early stages of RL training.

Structured Output Format

- One of the key issues encountered in DeepSeek-R1-Zero was language mixing and poor readability. To address this, the fine-tuning phase enforces a structured reasoning format:

`<reasoning_process> Step-by-step explanation of the problem-solving approach </reasoning_process>`

`<summary> Final Answer </summary>`

- This format ensures readability and helps align the model’s outputs with human expectations.
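- One practical benefit of a fixed format is that outputs can be checked and split mechanically. The sketch below extracts the reasoning and the final answer from a response that uses the `<reasoning_process>` and `<summary>` tags shown above. The regular expression and the function name `parse_structured_output` are assumptions for illustration, not part of DeepSeek's tooling.

```python
import re
from typing import Optional, Tuple

# Matches a reasoning block followed by a summary block; DOTALL lets
# the reasoning span multiple lines.
_PATTERN = re.compile(
    r"<reasoning_process>(?P<reasoning>.*?)</reasoning_process>\s*"
    r"<summary>(?P<summary>.*?)</summary>",
    re.DOTALL,
)


def parse_structured_output(text: str) -> Optional[Tuple[str, str]]:
    """Return (reasoning, summary), or None if the format is not followed."""
    match = _PATTERN.search(text)
    if match is None:
        return None
    return match.group("reasoning").strip(), match.group("summary").strip()


example = (
    "<reasoning_process> 2 + 2 = 4 </reasoning_process>\n\n"
    "<summary> 4 </summary>"
)
parsed = parse_structured_output(example)
```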

Loss Function for SFT

- The model is optimized using a supervised cross-entropy loss:

$$\mathcal{L}_{SFT} = -\sum_{i=1}^{n} \log P_\theta(o_i \mid q, \{o_1, \dots, o_{i-1}\})$$

- where:

- $o_i$ is the $i$-th token in the output sequence,

- $q$ is the input query,

- $o_1, \dots, o_{i-1}$ are the previously generated tokens.

- This step helps DeepSeek-R1 establish a strong foundation for structured reasoning before RL.
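- The loss sums only over output tokens; the query $q$ appears as conditioning context and contributes no loss terms. A minimal sketch of that masking follows, assuming per-token log-probabilities have already been computed by the model. The list-based representation and the names used are illustrative.

```python
import math
from typing import Sequence


def sft_loss(token_logprobs: Sequence[float], is_output_token: Sequence[bool]) -> float:
    """Negative log-likelihood summed over output tokens only.

    token_logprobs[i] is log P_theta(token_i | all earlier tokens);
    is_output_token[i] is False for query tokens, which are masked out.
    """
    if len(token_logprobs) != len(is_output_token):
        raise ValueError("one mask entry is required per token")
    return -sum(lp for lp, keep in zip(token_logprobs, is_output_token) if keep)


# One query token (masked) followed by two output tokens.
logprobs = [math.log(0.9), math.log(0.5), math.log(0.25)]
loss = sft_loss(logprobs, [False, True, True])
```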

Stage 2: RL

- RL is the backbone of DeepSeek-R1’s reasoning evolution. The model learns to optimize its reasoning trajectories based on reward-driven feedback mechanisms, leading to significant improvements in accuracy and coherence.

DeepSeek’s RL Methodology: a Conceptual Overview

- DeepSeek’s RL methodology is fundamentally inspired by self-play paradigms, akin to training AI models in games like chess. Traditionally, AI models trained for complex reasoning tasks leverage large datasets composed of human-annotated examples. However, such datasets often lack comprehensive coverage and may not contain optimal solutions. RL circumvents this limitation by allowing AI models to explore solutions autonomously, refining their strategies based on reward-driven feedback mechanisms.

- Consider an AI model trained to play chess. Instead of learning from a fixed dataset of historical games, the AI is programmed with only the fundamental rules of chess. It then engages in self-play, continuously experimenting with various moves. Initially, the model executes suboptimal actions, leading to losses. However, through iterative play, it identifies effective strategies and reinforces moves that contribute to victories while discarding ineffective ones. This trial-and-error process, governed by RL principles, enables the AI to develop strategies surpassing human intuition.

- DeepSeek applies this RL-based approach to reasoning-intensive domains, such as mathematical problem-solving. Rather than training on explicit mathematical derivations, the AI is provided with fundamental mathematical rules and tasked with solving problems autonomously. The model systematically explores various solution paths, reinforcing those that yield correct answers while discarding ineffective methodologies. Over time, this process enhances the AI’s mathematical reasoning abilities beyond traditional supervised learning approaches. The self-improving nature of RL fosters the discovery of novel problem-solving strategies, resulting in superior performance in mathematical reasoning and logic-based tasks.

Policy Optimization: Background

- Policy optimization involves an RL framework refining an agent’s decision-making process to maximize expected rewards.

- Traditional methods like REINFORCE provide a fundamental approach to learning policies directly from sampled trajectories, while more advanced techniques like Proximal Policy Optimization (PPO) introduce stability constraints.

- Group Relative Policy Optimization (GRPO) builds upon these foundations, addressing key limitations to enhance efficiency and stability in large-scale applications. GRPO can be seen as a hybrid between REINFORCE and PPO, integrating the variance reduction of PPO with the simplicity of direct policy gradient updates from REINFORCE, making it a promising alternative for reinforcement learning in large-scale language model training.

The REINFORCE Algorithm

- Before discussing GRPO, it is essential to understand REINFORCE, one of the earliest and simplest reinforcement learning algorithms.

What is REINFORCE?

- REINFORCE is a policy gradient method that updates a policy network based on complete trajectories sampled from the environment. It follows a straightforward approach:

- Sampling Trajectories: The agent interacts with the environment, generating an episode (a sequence of states, actions, and rewards).

- Reward Calculation: A single reward is assigned to the entire episode.

- Policy Update:

- Compute the gradient of the policy based on the log probability of actions taken.

- Scale the gradient by the total episode reward.

- Update the policy network by gradient ascent on the expected reward (equivalently, gradient descent on the negated objective).
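- The three steps above can be sketched on a toy problem: a two-armed bandit with a softmax policy, where each episode is a single action. The environment, learning rate, and episode count are hypothetical choices for illustration. The update is written as gradient ascent on expected reward, which is equivalent to gradient descent on the negated objective.

```python
import math
import random
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(
    rewards: List[float],  # hypothetical fixed reward for each action
    episodes: int = 500,
    lr: float = 0.1,
    seed: int = 0,
) -> List[float]:
    """Train a softmax policy with REINFORCE and return its action probabilities."""
    rng = random.Random(seed)
    logits = [0.0] * len(rewards)
    for _ in range(episodes):
        probs = softmax(logits)
        # 1. Sample a trajectory (here, a single action).
        action = rng.choices(range(len(rewards)), weights=probs)[0]
        # 2. Assign one reward to the whole episode.
        episode_reward = rewards[action]
        # 3. Policy update. For a softmax policy,
        #    d log pi(action) / d logit_j = 1[j == action] - probs[j].
        for j in range(len(logits)):
            grad_log_prob = (1.0 if j == action else 0.0) - probs[j]
            logits[j] += lr * episode_reward * grad_log_prob
    return softmax(logits)


final_probs = reinforce_bandit(rewards=[0.0, 1.0])
```

- Because the raw episode reward scales every update, the size of each step depends directly on the reward magnitude, which is one source of the variance discussed next.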

Limitations of REINFORCE

- High Variance: Since rewards are computed for entire episodes, updates can be noisy.

- Unstable Learning: Policy updates can be drastic, leading to instability.

- Lack of Baseline Correction: Vanilla REINFORCE neither subtracts a baseline nor normalizes rewards, so gradient estimates are noisy and training is sample-inefficient.

How GRPO Builds on REINFORCE

- GRPO modifies REINFORCE by:

- Using Group-Based Advantage Estimation: Instead of relying on a single episode reward, GRPO normalizes rewards within a group.

- Introducing a Clipped Loss Function: Prevents large policy updates.

- Reducing Variance: By averaging multiple sampled responses, GRPO provides a more stable policy update mechanism.

- By addressing these weaknesses, GRPO combines the simplicity of REINFORCE with the stability of modern policy optimization techniques.
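- A minimal sketch of group-based advantage estimation follows: each response's reward is standardized against the mean and standard deviation of the rewards in its group. The use of the population standard deviation and the small `eps` term guarding against division by zero are assumptions of this sketch.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Standardize rewards within one group of responses to the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; an assumption of this sketch
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled responses to one prompt: two correct (reward 1), two incorrect (reward 0).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

- Responses that beat the group average receive a positive advantage and the others a negative one, so no learned critic is needed to supply a baseline.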

Proximal Policy Optimization (PPO)

- Proximal Policy Optimization (PPO) is a widely used RL algorithm in RLHF, particularly in LLMs. PPO is an actor-critic method designed to optimize a policy while ensuring stable updates by limiting drastic deviations from previous policies.

- For a detailed discussion, please refer to our PPO primer.

How PPO Works

- PPO requires three primary components:

- Policy ($\pi_\theta$): The LLM being fine-tuned.

- Reward Model ($R_\phi$): A frozen network providing scalar feedback on complete responses.

- Critic ($V_\gamma$): A trainable value function predicting future rewards for partial responses.

- PPO follows an iterative workflow:

- Response Generation: The model generates multiple responses per prompt.

- Reward Assignment: The reward model scores each response.

- Advantage Computation: The advantage function estimates how much better an action is compared to average actions.

- Policy Optimization: The LLM is updated to maximize the advantage function using PPO’s clipped objective.

- Critic Update: The value function is trained to improve reward prediction.
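- The clipped objective used in the policy optimization step can be sketched for a single action as follows. The inputs are the log-probabilities of that action under the new and old policies and its advantage estimate; the default `clip_eps` of 0.2 is a commonly used value and is an assumption here, as is the function name.

```python
import math


def ppo_clipped_term(
    logp_new: float,
    logp_old: float,
    advantage: float,
    clip_eps: float = 0.2,  # illustrative default
) -> float:
    """Per-action PPO surrogate: min(r * A, clip(r, 1 - eps, 1 + eps) * A)."""
    ratio = math.exp(logp_new - logp_old)  # r = pi_new(a|s) / pi_old(a|s)
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)


# The new policy is 1.5x as likely to take an action with positive advantage;
# the objective is capped at (1 + clip_eps) * advantage.
capped = ppo_clipped_term(math.log(1.5), 0.0, advantage=1.0)
```

- Taking the minimum means the objective stops rewarding moves that push the probability ratio beyond the clipping range, which is how PPO limits drastic deviations from the previous policy.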

Challenges with PPO

- High Computational Cost: PPO requires a separate critic model, which roughly doubles memory requirements when the critic is comparable in size to the policy.

- Training Complexity: The critic must be updated in tandem with the policy, which can make training unstable.

- Potential Bias: The critic can introduce estimation biases, affecting policy optimization.

- These limitations motivated the introduction of Group Relative Policy Optimization (GRPO) by DeepSeek AI as part of DeepSeekMath.

How GRPO Builds on PPO

- GRPO addresses PPO’s limitations by replacing the critic with a group-based reward normalization mechanism, reducing computational overhead while maintaining sample efficiency.

- Unlike PPO, which relies on a critic to estimate future rewards, GRPO directly normalizes rewards within a group of responses to compute an advantage function, eliminating potential biases introduced by the critic.

- PPO’s clipped objective function is retained in GRPO, ensuring stable policy updates and preventing overly large parameter shifts.

- By avoiding the need for a separate critic model, GRPO reduces memory and compute costs, making it more scalable for large-scale training.

- The combination of group-based reward normalization and clipped policy updates allows GRPO to achieve comparable stability to PPO while being computationally more efficient.

- The table below compares REINFORCE, PPO, and GRPO in terms of critic model usage, compute cost, stability, advantage estimation, and training complexity. GRPO stands out for its high stability and PPO for its high compute cost.

| Feature | REINFORCE | PPO | GRPO |
|---|---|---|---|
| Critic Model? | ❌ No | ✅ Yes | ❌ No |
| Compute Cost | Low | High | Low |
| Stability | Low (high variance) | Moderate | High (group normalization) |
| Advantage Estimation | Episode reward | Learned critic | Group-based normalization |
| Training Complexity | Low | High | Moderate |

Group Relative Policy Optimization (GRPO)